Copyright notice

Journals

2025

161:111304 May 2025

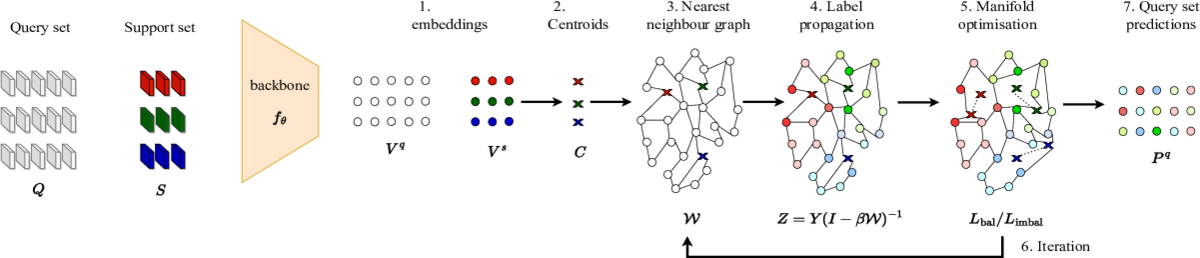

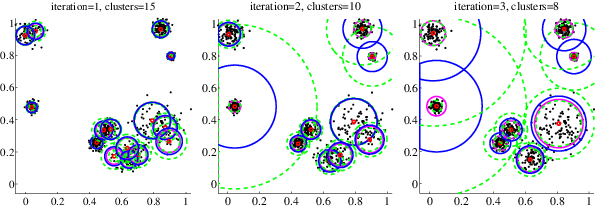

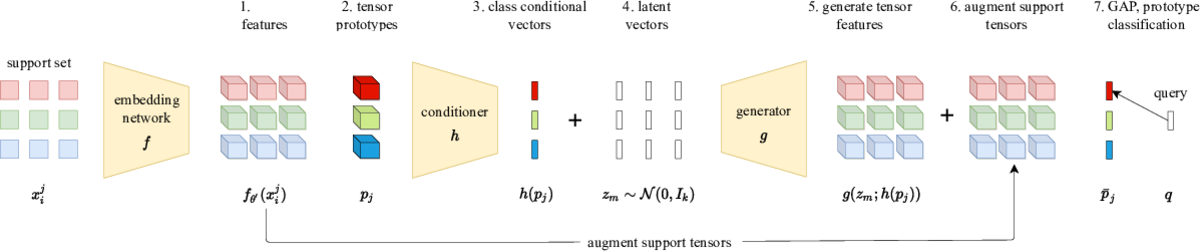

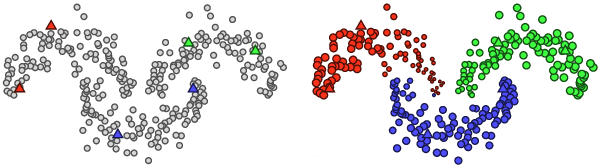

Few-shot learning investigates how to solve novel tasks given limited labeled data. Exploiting unlabeled data along with the limited labeled has shown substantial improvement in performance. In this work we propose a novel algorithm that exploits unlabeled data in order to improve the performance of few-shot learning. We focus on transductive few-shot inference, where the entire test set is available at inference time, and semi-supervised few-shot learning where unlabeled data are available and can be exploited. Our algorithm starts by leveraging the manifold structure of the labeled and unlabeled data in order to assign accurate pseudo-labels to the unlabeled data. Iteratively, it selects the most confident pseudo-labels and treats them as labeled improving the quality of pseudo-labels at every iteration. Our method surpasses or matches the state of the art results on four benchmark datasets, namely miniImageNet, tieredImageNet, CUB and CIFAR-FS, while being robust over feature pre-processing and the quantity of available unlabeled data. Furthermore, we investigate the setting where the unlabeled data contains data from distractor classes and propose ideas to adapt our algorithm achieving new state of the art performance in the process. Specifically, we utilize the unnormalized manifold class similarities obtained from label propagation for pseudo-label cleaning and exploit the uneven pseudo-label distribution between classes to remove noisy data. The publicly available source code can be found at https://github.com/MichalisLazarou/iLPC.

@article{J34,

title = {Exploiting unlabeled data in few-shot learning},

author = {Lazarou, Michalis and Stathaki, Tania and Avrithis, Yannis},

journal = {Pattern Recognition (PR)},

volume = {161},

pages = {111304},

month = {5},

year = {2025}

}2024

32:5010-5023 Nov 2024

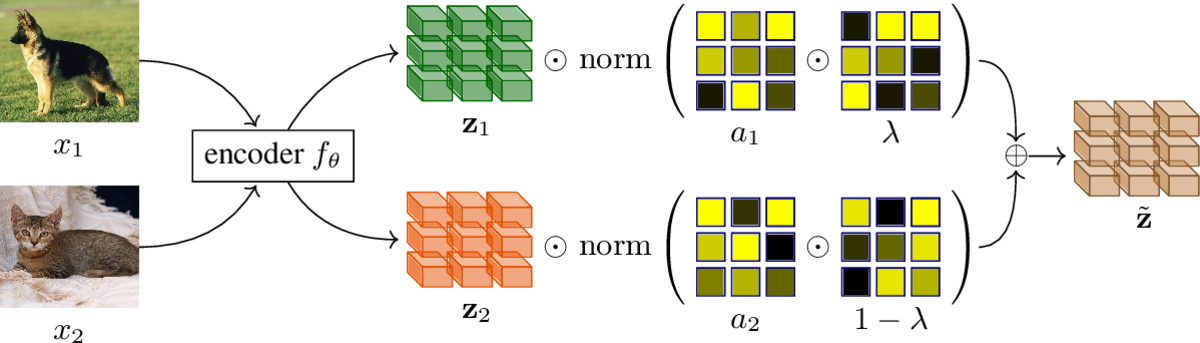

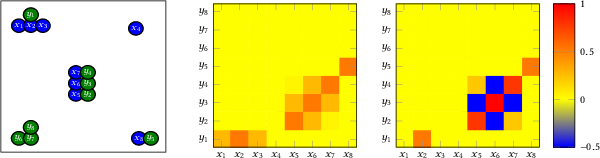

Multimodal sentiment analysis (MSA) leverages heterogeneous data sources to interpret the complex nature of human sentiments. Despite significant progress in multimodal architecture design, the field lacks comprehensive regularization methods. This paper introduces PowMix, a versatile embedding space regularizer that builds upon the strengths of unimodal mixing-based regularization approaches and introduces novel algorithmic components that are specifically tailored to multimodal tasks. PowMix is integrated before the fusion stage of multimodal architectures and facilitates intra-modal mixing, such as mixing text with text, to act as a regularizer. PowMix consists of five components: 1) a varying number of generated mixed examples, 2) mixing factor reweighting, 3) anisotropic mixing, 4) dynamic mixing, and 5) cross-modal label mixing. Extensive experimentation across benchmark MSA datasets and a broad spectrum of diverse architectural designs demonstrate the efficacy of PowMix, as evidenced by consistent performance improvements over baselines and existing mixing methods. An in-depth ablation study highlights the critical contribution of each PowMix component and how they synergistically enhance performance. Furthermore, algorithmic analysis demonstrates how PowMix behaves in different scenarios, particularly comparing early versus late fusion architectures. Notably, PowMix enhances overall performance without sacrificing model robustness or magnifying text dominance. It also retains its strong performance in situations of limited data. Our findings position PowMix as a promising versatile regularization strategy for MSA.

@article{J33,

title = {{PowMix}: A Versatile Regularizer for Multimodal Sentiment Analysis},

author = {Georgiou, Efthymios and Avrithis, Yannis and Potamianos, Alexandros},

journal = {IEEE Transactions on Audio, Speech and Language Processing (TASL)},

volume = {32},

pages = {5010--5023},

month = {11},

year = {2024}

}248:104101 Nov 2024

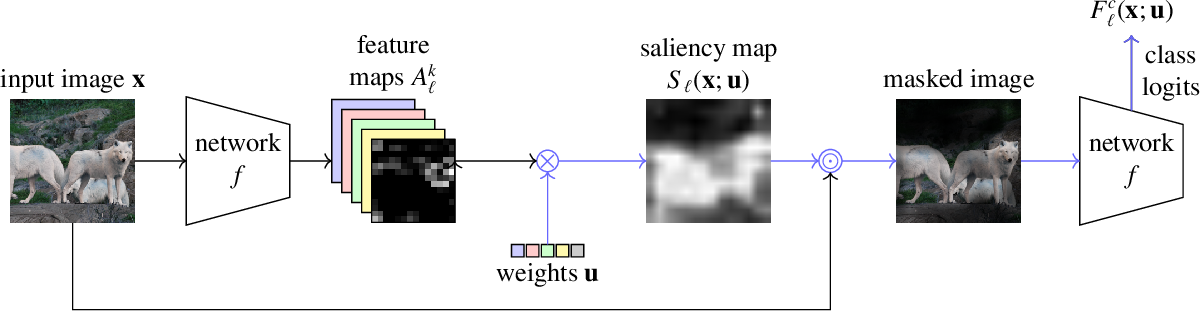

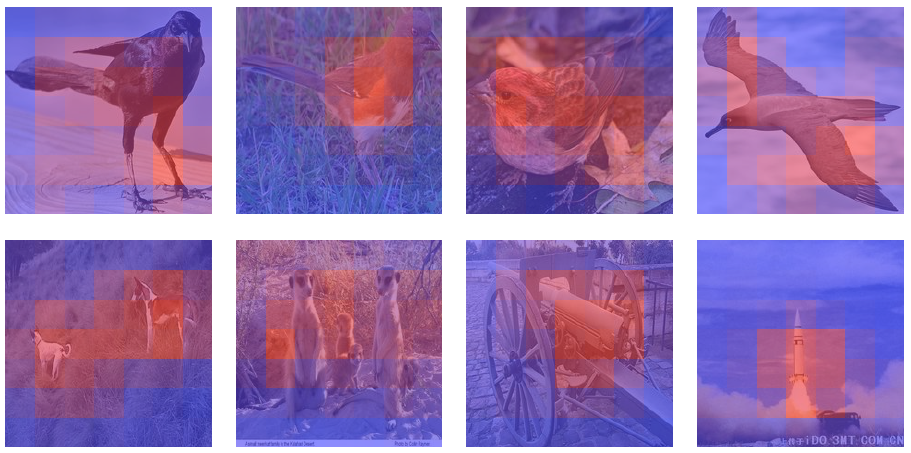

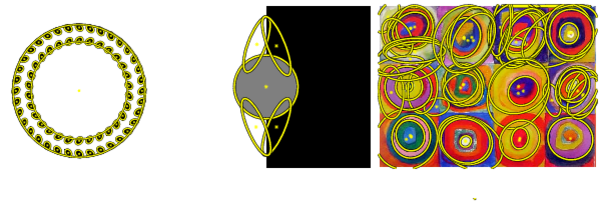

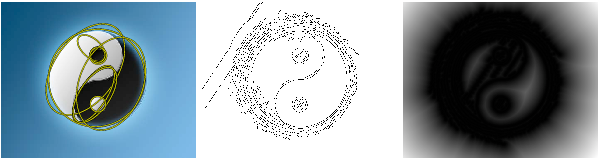

Methods based on class activation maps (CAM) provide a simple mechanism to interpret predictions of convolutional neural networks by using linear combinations of feature maps as saliency maps. By contrast, masking-based methods optimize a saliency map directly in the image space or learn it by training another network on additional data.

In this work we introduce Opti-CAM, combining ideas from CAM-based and masking-based approaches. Our saliency map is a linear combination of feature maps, where weights are optimized per image such that the logit of the masked image for a given class is maximized. We also fix a fundamental flaw in two of the most common evaluation metrics of attribution methods. On several datasets, Opti-CAM largely outperforms other CAM-based approaches according to the most relevant classification metrics. We provide empirical evidence supporting that localization and classifier interpretability are not necessarily aligned.

@article{J32,

title = {Opti-{CAM}: Optimizing saliency maps for interpretability},

author = {Zhang, Hanwei and Torres, Felipe and Sicre, Ronan and Avrithis, Yannis and Ayache, Stephane},

journal = {Computer Vision and Image Understanding (CVIU)},

volume = {248},

pages = {104101},

month = {11},

year = {2024}

}2021

120:108-164 Dec 2021

Weakly-supervised object detection attempts to limit the amount of supervision by dispensing the need for bounding boxes, but still assumes image-level labels on the entire training set. In this work, we study the problem of training an object detector from one or few images with image-level labels and a larger set of completely unlabeled images. This is an extreme case of semi-supervised learning where the labeled data are not enough to bootstrap the learning of a detector. Our solution is to train a weakly-supervised student detector model from image-level pseudo-labels generated on the unlabeled set by a teacher classifier model, bootstrapped by region-level similarities to labeled images. Building upon the recent representative weakly-supervised pipeline PCL, our method can use more unlabeled images to achieve performance competitive or superior to many recent weakly-supervised detection solutions.

@article{J31,

title = {Training Object Detectors from Few Weakly-Labeled and Many Unlabeled Images},

author = {Yang, Zhaohui and Shi, Miaojing and Xu, Chao and Ferrari, Vittorio and Avrithis, Yannis},

journal = {Pattern Recognition (PR)},

volume = {120},

pages = {108--164},

month = {12},

year = {2021}

}16:701-713 Sep 2021

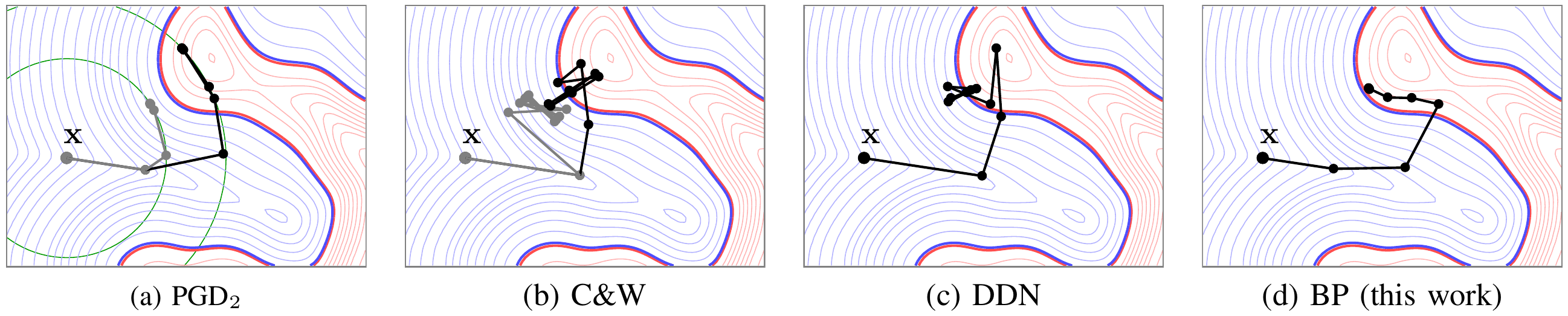

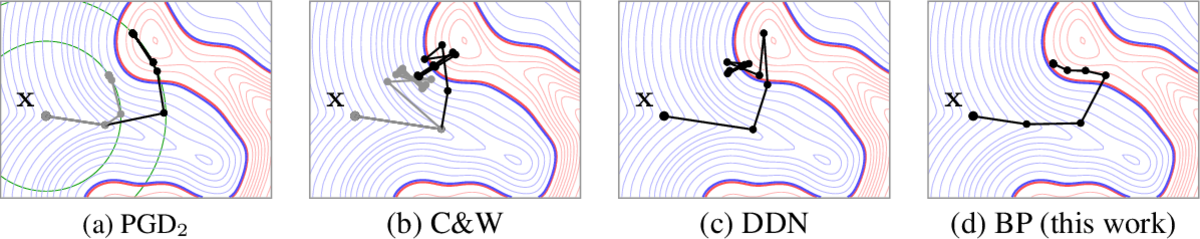

Adversarial examples of deep neural networks are receiving ever increasing attention because they help in understanding and reducing the sensitivity to their input. This is natural given the increasing applications of deep neural networks in our everyday lives. When white-box attacks are almost always successful, it is typically only the distortion of the perturbations that matters in their evaluation. In this work, we argue that speed is important as well, especially when considering that fast attacks are required by adversarial training. Given more time, iterative methods can always find better solutions. We investigate this speed-distortion trade-off in some depth and introduce a new attack called boundary projection (BP) that improves upon existing methods by a large margin. Our key idea is that the classification boundary is a manifold in the image space: we therefore quickly reach the boundary and then optimize distortion on this manifold.

@article{J30,

title = {Walking on the Edge: Fast, Low-Distortion Adversarial Examples},

author = {Zhang, Hanwei and Avrithis, Yannis and Furon, Teddy and Amsaleg, Laurent},

journal = {IEEE Transactions on Information Forensics and Security (TIFS)},

volume = {16},

pages = {701--713},

month = {9},

year = {2021}

}2020

2020:15-26 Nov 2020

This paper investigates the visual quality of the adversarial examples. Recent papers propose to smooth the perturbations to get rid of high frequency artefacts. In this work, smoothing has a different meaning as it perceptually shapes the perturbation according to the visual content of the image to be attacked. The perturbation becomes locally smooth on the flat areas of the input image, but it may be noisy on its textured areas and sharp across its edges.

This operation relies on Laplacian smoothing, well-known in graph signal processing, which we integrate in the attack pipeline. We benchmark several attacks with and without smoothing under a white-box scenario and evaluate their transferability. Despite the additional constraint of smoothness, our attack has the same probability of success at lower distortion.

@article{J29,

title = {Smooth Adversarial Examples},

author = {Zhang, Hanwei and Avrithis, Yannis and Furon, Teddy and Amsaleg, Laurent},

journal = {EURASIP Journal on Information Security (JIS)},

volume = {2020},

pages = {15--26},

month = {11},

year = {2020}

}2019

30(2):243-254 Mar 2019

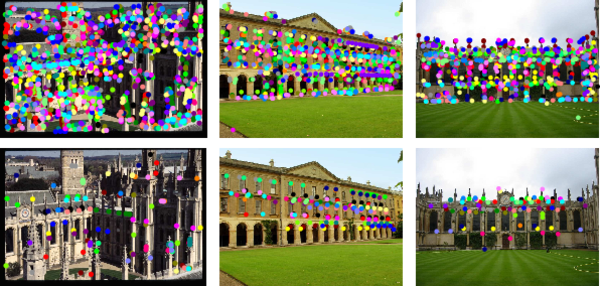

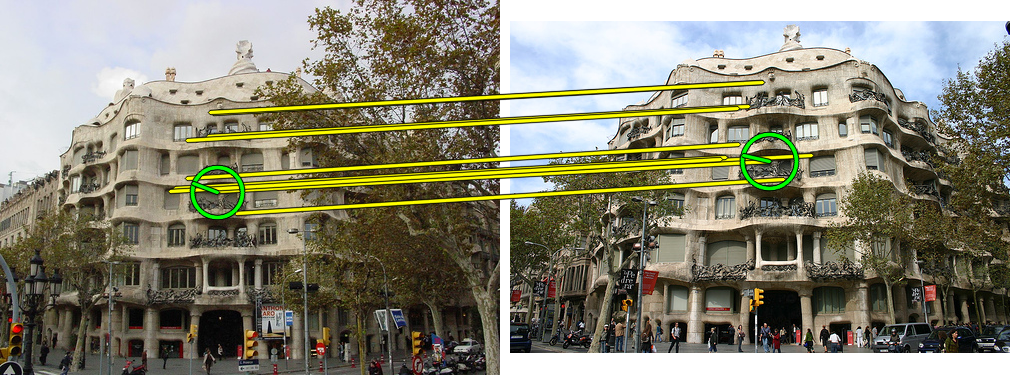

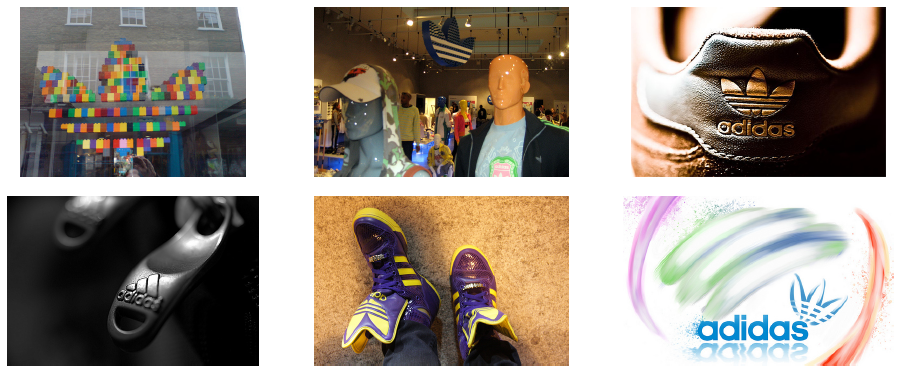

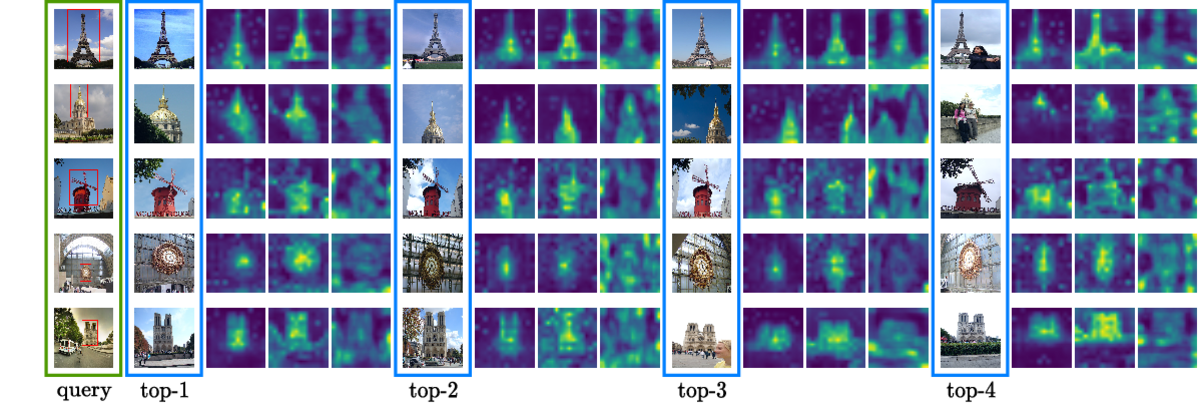

Severe background clutter is challenging in many computer vision tasks, including large-scale image retrieval. Global descriptors, that are popular due to their memory and search efficiency, are especially prone to corruption by such a clutter. Eliminating the impact of the clutter on the image descriptor increases the chance of retrieving relevant images and prevents topic drift due to actually retrieving the clutter in the case of query expansion. In this work, we propose a novel salient region detection method. It captures, in an unsupervised manner, patterns that are both discriminative and common in the dataset. Saliency is based on a centrality measure of a nearest neighbor graph constructed from regional CNN representations of dataset images. The proposed method exploits recent CNN architectures trained for object retrieval to construct the image representation from the salient regions. We improve particular object retrieval on challenging datasets containing small objects.

@article{J28,

title = {Graph-based Particular Object Discovery},

author = {Sim\'eoni, Oriane and Iscen, Ahmet and Tolias, Giorgos and Avrithis, Yannis and Chum, Ond\v{r}ej},

journal = {Machine Vision and Applications (MVA)},

volume = {30},

number = {2},

month = {3},

pages = {243--254},

year = {2019}

}179:66-78 Feb 2019

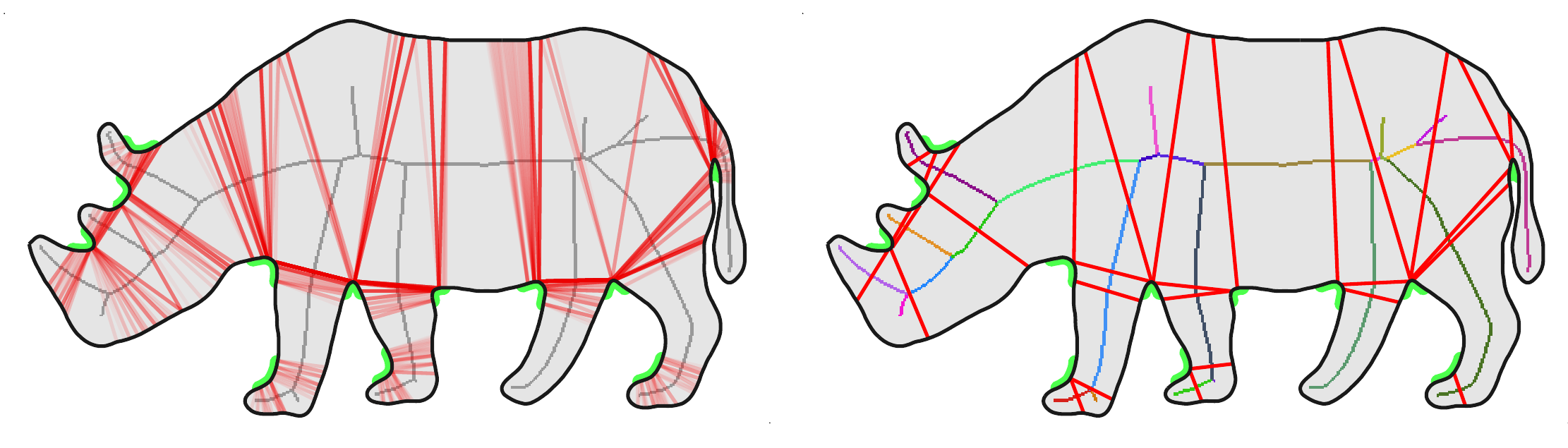

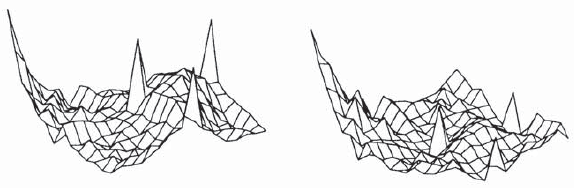

We present a simple computational model for planar shape decomposition that naturally captures most of the rules and salience measures suggested by psychophysical studies, including the minima and short-cut rules, convexity, and symmetry. It is based on a medial axis representation in ways that have not been explored before and sheds more light into the connection between existing rules like minima and convexity. In particular, vertices of the exterior medial axis directly provide the position and extent of negative minima of curvature, while a traversal of the interior medial axis directly provides a small set of candidate endpoints for part-cuts. The final selection follows a prioritized processing of candidate part-cuts according to a local convexity rule that can incorporate arbitrary salience measures. Neither global optimization nor differentiation is involved. We provide qualitative and quantitative evaluation and comparisons on ground-truth data from psychophysical experiments. With our single computational model, we outperform even an ensemble method on several other competing models.

@article{J27,

title = {Revisiting the Medial Axis for Planar Shape Decomposition},

author = {Papanelopoulos, Nikos and Avrithis, Yannis and Kollias, Stefanos},

journal = {Computer Vision and Image Understanding (CVIU)},

volume = {179},

month = {2},

pages = {66--78},

year = {2019}

}2016

50(1):56-73 Feb 2016

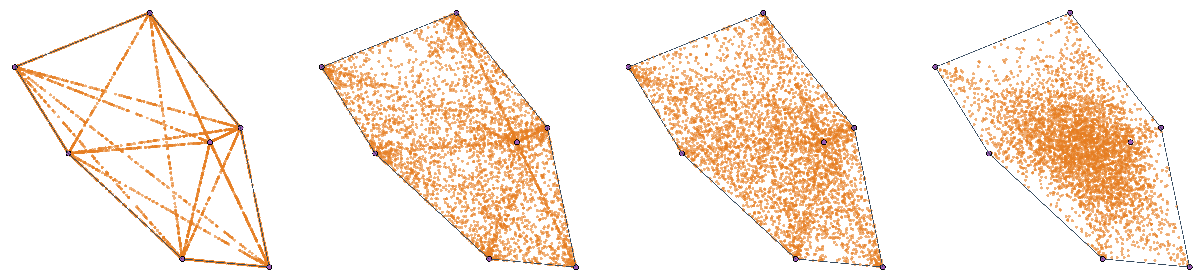

Local image features are routinely used in state-of-the-art methods to solve many computer vision problems like image retrieval, classification, or 3D registration. As the applications become more complex, the research for better visual features is still active. In this paper we present a feature detector that exploits the inherent geometry of sampled image edges using α-shapes. We propose a novel edge sampling scheme that exploits local shape and investigate different triangulations of sampled points. We also introduce a novel approach to represent the anisotropy in a triangulation along with different feature selection methods. Our detector provides a small number of distinctive features that is ideal for large scale applications, while achieving competitive performance in a series of matching and retrieval experiments.

@article{J26,

title = {$\alpha$-shapes for local feature detection},

author = {Varytimidis, Christos and Rapantzikos, Konstantinos and Avrithis, Yannis and Kollias, Stefanos},

journal = {Pattern Recognition (PR)},

volume = {50},

number = {1},

month = {2},

pages = {56--73},

year = {2016}

}116(3):247-261 Feb 2016

This paper considers a family of metrics to compare images based on their local descriptors. It encompasses the VLAD descriptor and matching techniques such as Hamming Embedding. Making the bridge between these approaches leads us to propose a match kernel that takes the best of existing techniques by combining an aggregation procedure with a selective match kernel. The representation underpinning this kernel is approximated, providing a large scale image search both precise and scalable, as shown by our experiments on several benchmarks.

We show that the same aggregation procedure, originally applied per image, can effectively operate on groups of similar features found across multiple images. This method implicitly performs feature set augmentation, while enjoying savings in memory requirements at the same time. Finally, the proposed method is shown effective for place recognition, outperforming state of the art methods on a large scale landmark recognition benchmark.

@article{J25,

title = {Image search with selective match kernels: aggregation across single and multiple images},

author = {Tolias, Giorgos and Avrithis, Yannis and J\'egou, Herv\'e},

journal = {International Journal of Computer Vision (IJCV)},

volume = {116},

number = {3},

month = {2},

pages = {247--261},

year = {2016}

}2015

7(1):189-200 Dec 2015

Local feature detection has been an essential part of many methods for computer vision applications like large scale image retrieval, object detection, or tracking. Recently, structure-guided feature detectors have been proposed, exploiting image edges to accurately capture local shape. Among them, the WαSH detector [Varytimidis et al., 2012] starts from sampling binary edges and exploits α-shapes, a computational geometry representation that describes local shape in different scales. In this work, we propose a novel image sampling method, based on dithering smooth image functions other than intensity. Samples are extracted on image contours representing the underlying shapes, with sampling density determined by image functions like the gradient or Hessian response, rather than being fixed. We thoroughly evaluate the parameters of the method, and achieve state-of-the-art performance on a series of matching and retrieval experiments.

@article{J24,

title = {Dithering-based Sampling and Weighted $\alpha$-shapes for Local Feature Detection},

author = {Varytimidis, Christos and Rapantzikos, Konstantinos and Avrithis, Yannis and Kollias, Stefanos},

journal = {IPSJ Transactions on Computer Vision and Applications (CVA)},

publisher = {Information Processing Society of Japan},

volume = {7},

number = {1},

month = {12},

pages = {189--200},

year = {2015}

}2014

120:31-45 Mar 2014

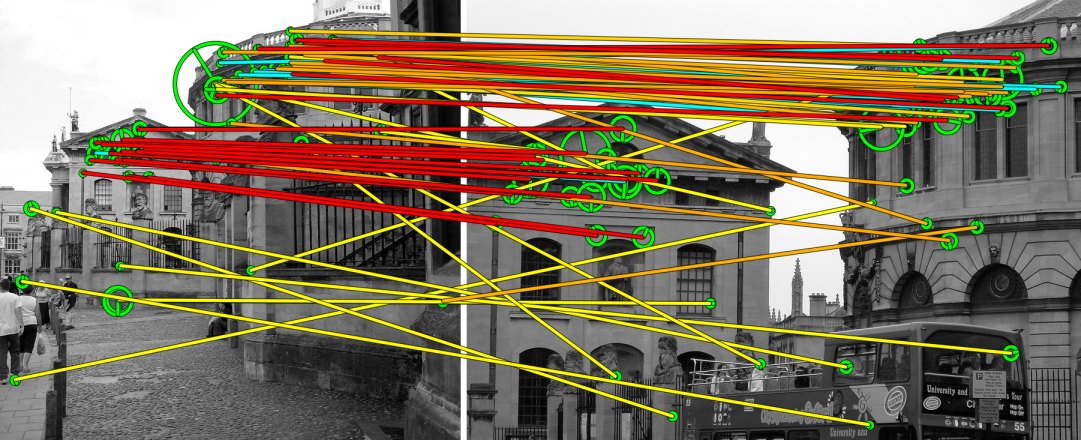

We present a new approach to image indexing and retrieval, which integrates appearance with global image geometry in the indexing process, while enjoying robustness against viewpoint change, photometric variations, occlusion, and background clutter. We exploit shape parameters of local features to estimate image alignment via a single correspondence. Then, for each feature, we construct a sparse spatial map of all remaining features, encoding their normalized position and appearance, typically vector quantized to visual word. An image is represented by a collection of such feature maps and RANSAC-like matching is reduced to a number of set intersections. The required index space is still quadratic in the number of features. To make it linear, we propose a novel feature selection model tailored to our feature map representation, replacing our earlier hashing approach. The resulting index space is comparable to baseline bag-of-words, scaling up to one million images while outperforming the state of the art on three publicly available datasets. To our knowledge, this is the first geometry indexing method to dispense with spatial verification at this scale, bringing query times down to milliseconds.

@article{J23,

title = {Towards large-scale geometry indexing by feature selection},

author = {Tolias, Giorgos and Kalantidis, Yannis and Avrithis, Yannis and Kollias, Stefanos},

journal = {Computer Vision and Image Understanding (CVIU)},

volume = {120},

pages = {31--45},

month = {03},

year = {2014}

}107(1):1-19 Mar 2014

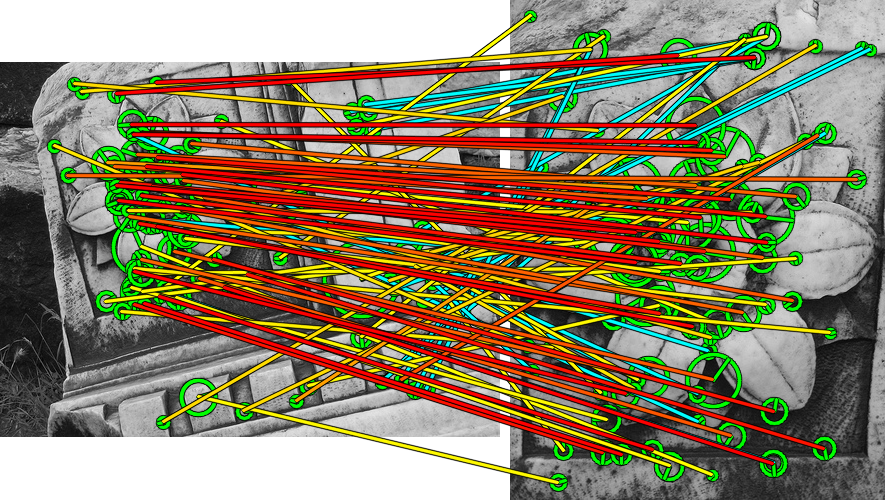

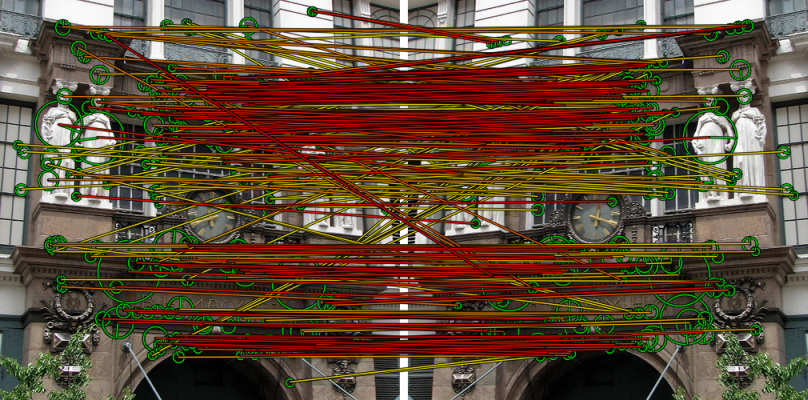

Exploiting local feature shape has made geometry indexing possible, but at a high cost of index space, while a sequential spatial verification and re-ranking stage is still indispensable for large scale image retrieval. In this work we investigate an accelerated approach for the latter problem. We develop a simple spatial matching model inspired by Hough voting in the transformation space, where votes arise from single feature correspondences. Using a histogram pyramid, we effectively compute pair-wise affinities of correspondences without ever enumerating all pairs. Our Hough pyramid matching algorithm is linear in the number of correspondences and allows for multiple matching surfaces or non-rigid objects under one-to-one mapping. We achieve re-ranking one order of magnitude more images at the same query time with superior performance compared to state of the art methods, while requiring the same index space. We show that soft assignment is compatible with this matching scheme, preserving one-to-one mapping and further increasing performance.

@article{J22,

title = {Hough Pyramid Matching: Speeded-up geometry re-ranking for large scale image retrieval},

author = {Avrithis, Yannis and Tolias, Giorgos},

journal = {International Journal of Computer Vision (IJCV)},

volume = {107},

number = {1},

month = {3},

pages = {1--19},

year = {2014}

}2013

15(7):1553-1568 Nov 2013

Multimodal streams of sensory information are naturally parsed and integrated by humans using signal-level feature extraction and higher-level cognitive processes. Detection of attention-invoking audiovisual segments is formulated in this work on the basis of saliency models for the audio, visual and textual information conveyed in a video stream. Aural or auditory saliency is assessed by cues that quantify multifrequency waveform modulations, extracted through nonlinear operators and energy tracking. Visual saliency is measured through a spatiotemporal attention model driven by intensity, color and orientation. Textual or linguistic saliency is extracted from part-of-speech tagging on the subtitles information available with most movie distributions. The individual saliency streams, obtained from modality-depended cues, are integrated in a multimodal saliency curve, modeling the time-varying perceptual importance of the composite video stream and signifying prevailing sensory events. The multimodal saliency representation forms the basis of a generic, bottom-up video summarization algorithm. Different fusion schemes are evaluated on a movie database of multimodal saliency annotations with comparative results provided across modalities. The produced summaries, based on low-level features and content-independent fusion and selection, are of subjectively high aesthetic and informative quality.

@article{J21,

title = {Multimodal Saliency and Fusion for Movie Summarization based on Aural, Visual, and Textual Attention},

author = {Evangelopoulos, Georgios and Zlatintsi, Athanasia and Potamianos, Alexandros and Maragos, Petros and Rapantzikos, Konstantinos and Skoumas, Georgios and Avrithis, Yannis},

journal = {IEEE Transactions on Multimedia (TMM)},

volume = {15},

number = {7},

month = {11},

pages = {1553--1568},

year = {2013}

}2011

Special issue on Saliency, Attention, Visual Search and Picture Scanning

3(1):167-184 Mar 2011

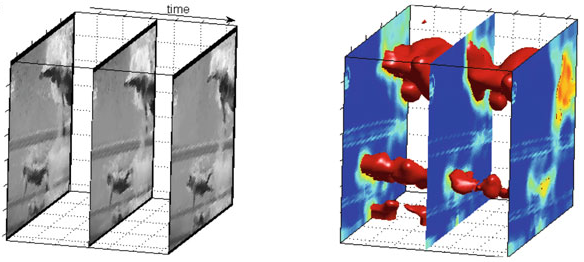

Although the mechanisms of human visual understanding remain partially unclear, computational models inspired by existing knowledge on human vision have emerged and applied to several fields. In this paper, we propose a novel method to compute visual saliency from video sequences by counting in the actual spatiotemporal nature of the video. The visual input is represented by a volume in space–time and decomposed into a set of feature volumes in multiple resolutions. Feature competition is used to produce a saliency distribution of the input implemented by constrained minimization. The proposed constraints are inspired by and associated with the Gestalt laws. There are a number of contributions in this approach, namely extending existing visual feature models to a volumetric representation, allowing competition across features, scales and voxels, and formulating constraints in accordance with perceptual principles. The resulting saliency volume is used to detect prominent spatiotemporal regions and consequently applied to action recognition and perceptually salient event detection in video sequences. Comparisons against established methods on public datasets are given and reveal the potential of the proposed model. The experiments include three action recognition scenarios and salient temporal segment detection in a movie database annotated by humans.

@article{J20,

title = {Spatiotemporal features for action recognition and salient event detection},

author = {Rapantzikos, Konstantinos and Avrithis, Yannis and Kollias, Stefanos},

journal = {Cognitive Computation (CC) (Special Issue on Saliency, Attention, Visual Search and Picture Scanning)},

editor = {J.G. Taylor and V. Cutsuridis},

volume = {3},

number = {1},

month = {3},

pages = {167--184},

year = {2011}

}51(2):555-592 Jan 2011

New applications are emerging every day exploiting the huge data volume in community photo collections. Most focus on popular subsets, e.g. images containing landmarks or associated to Wikipedia articles. In this work we are concerned with the problem of accurately finding the location where a photo is taken without needing any metadata, that is, solely by its visual content. We also recognize landmarks where applicable, automatically linking to Wikipedia. We show that the time is right for automating the geo-tagging process, and we show how this can work at large scale. In doing so, we do exploit redundancy of content in popular locations - but unlike most existing solutions, we do not restrict to landmarks. In other words, we can compactly represent the visual content of all thousands of images depicting e.g. the Parthenon and still retrieve any single, isolated, non-landmark image like a house or a graffiti on a wall. Starting from an existing, geo-tagged dataset, we cluster images into sets of different views of the same scene. This is a very efficient, scalable, and fully automated mining process. We then align all views in a set to one reference image and construct a 2D scene map. Our indexing scheme operates directly on scene maps. We evaluate our solution on a challenging one million urban image dataset and provide public access to our service through our application, VIRaL.

@article{J19,

title = {{VIRaL}: Visual Image Retrieval and Localization},

author = {Kalantidis, Yannis and Tolias, Giorgos and Avrithis, Yannis and Phinikettos, Marios and Spyrou, Evaggelos and Mylonas, Phivos and Kollias, Stefanos},

journal = {Multimedia Tools and Applications (MTAP)},

volume = {51},

number = {2},

month = {1},

pages = {555--592},

year = {2011}

}2009

24(7):557-571 Aug 2009

Computer vision applications often need to process only a representative part of the visual input rather than the whole image/sequence. Considerable research has been carried out into salient region detection methods based either on models emulating human visual attention (VA) mechanisms or on computational approximations. Most of the proposed methods are bottom-up and their major goal is to filter out redundant visual information. In this paper, we propose and elaborate on a saliency detection model that treats a video sequence as a spatiotemporal volume and generates a local saliency measure for each visual unit (voxel). This computation involves an optimization process incorporating inter- and intra-feature competition at the voxel level. Perceptual decomposition of the input, spatiotemporal center-surround interactions and the integration of heterogeneous feature conspicuity values are described and an experimental framework for video classification is set up. This framework consists of a series of experiments that shows the effect of saliency in classification performance and let us draw conclusions on how well the detected salient regions represent the visual input. A comparison is attempted that shows the potential of the proposed method.

@article{J18,

title = {Spatiotemporal Saliency for Video Classification},

author = {Rapantzikos, Konstantinos and Tsapatsoulis, Nicolas and Avrithis, Yannis and Kollias, Stefanos},

journal = {Signal Processing: Image Communication (SP:IC)},

volume = {24},

number = {7},

month = {8},

pages = {557--571},

year = {2009}

}11(11):229-243 Feb 2009

In this paper we investigate detection of high-level concepts in multimedia content through an integrated approach of visual thesaurus analysis and visual context. In the former, detection is based on model vectors that represent image composition in terms of region types, obtained through clustering over a large data set. The latter deals with two aspects, namely high-level concepts and region types of the thesaurus, employing a model of a priori specified semantic relations among concepts and automatically extracted topological relations among region types; thus it combines both conceptual and topological context. A set of algorithms is presented, which modify either the confidence values of detected concepts, or the model vectors based on which detection is performed. Visual context exploitation is evaluated on TRECVID and Corel data sets and compared to a number of related visual thesaurus approaches.

@article{J17,

title = {Using Visual Context and Region Semantics for High-Level Concept Detection},

author = {Mylonas, Phivos and Spyrou, Evaggelos and Avrithis, Yannis and Kollias, Stefanos},

journal = {IEEE Transactions on Multimedia (TMM)},

volume = {11},

number = {11},

month = {2},

pages = {229--243},

year = {2009}

}41(3):337-373 Feb 2009

This paper presents a video analysis approach based on concept detection and keyframe extraction employing a visual thesaurus representation. Color and texture descriptors are extracted from coarse regions of each frame and a visual thesaurus is constructed after clustering regions. The clusters, called region types, are used as basis for representing local material information through the construction of a model vector for each frame, which reflects the composition of the image in terms of region types. Model vector representation is used for keyframe selection either in each video shot or across an entire sequence. The selection process ensures that all region types are represented. A number of high-level concept detectors is then trained using global annotation and Latent Semantic Analysis is applied. To enhance detection performance per shot, detection is employed on the selected keyframes of each shot, and a framework is proposed for working on very large data sets.

@article{J16,

title = {Concept detection and keyframe extraction using a visual thesaurus},

author = {Spyrou, Evaggelos and Tolias, Giorgos and Mylonas, Phivos and Avrithis, Yannis},

journal = {Multimedia Tools and Applications (MTAP)},

volume = {41},

number = {3},

month = {2},

pages = {337--373},

year = {2009}

}2008

39(3):293-327 Sep 2008

In this paper we present a framework for unified, personalized access to heterogeneous multimedia content in distributed repositories. Focusing on semantic analysis of multimedia documents, metadata, user queries and user profiles, it contributes to the bridging of the gap between the semantic nature of user queries and raw multimedia documents. The proposed approach utilizes as input visual content analysis results, as well as analyzes and exploits associated textual annotation, in order to extract the underlying semantics, construct a semantic index and classify documents to topics, based on a unified knowledge and semantics representation model. It may then accept user queries, and, carrying out semantic interpretation and expansion, retrieve documents from the index and rank them according to user preferences, similarly to text retrieval. All processes are based on a novel semantic processing methodology, employing fuzzy algebra and principles of taxonomic knowledge representation. Part I of this work presented in this paper deals with data and knowledge models, manipulation of multimedia content annotations and semantic indexing, while Part II will continue on the use of the extracted semantic information for personalized retrieval.

@article{J15,

title = {Semantic Representation of Multimedia Content: Knowledge Representation and Semantic Indexing},

author = {Mylonas, Phivos and Athanasiadis, Thanos and Wallace, Manolis and Avrithis, Yannis and Kollias, Stefanos},

journal = {Multimedia Tools and Applications (MTAP)},

publisher = {Springer},

volume = {39},

number = {3},

month = {9},

pages = {293--327},

year = {2008}

}23(1):73-100 Mar 2008

Context modeling has been long acknowledged as a key aspect in a wide variety of problem domains. In this paper we focus on the combination of contextualization and personalization methods to improve the performance of personalized information retrieval. The key aspects in our proposed approach are a) the explicit distinction between historic user context and live user context, b) the use of ontology-driven representations of the domain of discourse, as a common, enriched representational ground for content meaning, user interests, and contextual conditions, enabling the definition of effective means to relate the three of them, and c) the introduction of fuzzy representations as an instrument to properly handle the uncertainty and imprecision involved in the automatic interpretation of meanings, user attention, and user wishes. Based on a formal grounding at the representational level, we propose methods for the automatic extraction of persistent semantic user preferences, and live, ad-hoc user interests, which are combined in order to improve the accuracy and reliability of personalization for retrieval.

@article{J14,

title = {Personalized information retrieval based on context and ontological knowledge},

author = {Mylonas, Phivos and Vallet, David and Castells, Pablo and Fern\'andez, Miriam and Avrithis, Yannis},

journal = {Knowledge Engineering Review (KER)},

volume = {23},

number = {1},

month = {3},

pages = {73--100},

year = {2008}

}2007

17(3):298-312 Mar 2007

In this paper we present a framework for simultaneous image segmentation and object labeling leading to automatic image annotation. Focusing on semantic analysis of images, it contributes to knowledge-assisted multimedia analysis and the bridging of the gap between its semantics and low level visual features. The proposed framework operates at semantic level using possible semantic labels, formally defined as fuzzy sets, to make decisions on handling image regions instead of visual features used traditionally. In order to stress its independence of a specific image segmentation approach we have modified two well known region growing algorithms, i.e. watershed and recursive shortest spanning tree, and compared them with their traditional counterparts. Additionally, a visual context representation and analysis approach is presented, blending global knowledge in interpreting each object locally. Contextual information is based on a novel semantic processing methodology, employing fuzzy algebra and ontological taxonomic knowledge representation. In this process, utilization of contextual knowledge re-adjusts semantic region growing labeling results appropriately, by means of fine-tuning the membership degrees of detected concepts. The performance of the overall methodology is demonstrated on a real-life still image dataset from two popular domains.

@article{J13,

title = {Semantic Image Segmentation and Object Labeling},

author = {Athanasiadis, Thanos and Mylonas, Phivos and Avrithis, Yannis and Kollias, Stefanos},

journal = {IEEE Transactions on Circuits and Systems for Video Technology (CSVT)},

volume = {17},

number = {3},

month = {3},

pages = {298--312},

year = {2007}

}17(3):336-346 Mar 2007

Personalized content retrieval aims at improving the retrieval process by taking into account the particular interests of individual users. However, not all user preferences are relevant in all situations. It is well known that human preferences are complex, multiple, heterogeneous, changing, even contradictory, and should be understood in context with the user goals and tasks at hand. In this paper we propose a method to build a dynamic representation of the semantic context of ongoing retrieval tasks, which is used to activate different subsets of user interests at runtime, in a way that out–of-context preferences are discarded. Our approach is based on an ontology-driven representation of the domain of discourse, providing enriched descriptions of the semantics involved in retrieval actions and preferences, and enabling the definition of effective means to relate preferences and context.

@article{J12,

title = {Personalized Content Retrieval in Context Using Ontological Knowledge},

author = {Vallet, David and Castells, Pablo and Fern\'andez, Miriam and Mylonas, Phivos and Avrithis, Yannis},

journal = {IEEE Transactions on Circuits and Systems for Video Technology (CSVT)},

volume = {17},

number = {3},

month = {3},

pages = {336--346},

year = {2007}

}1(2):237-248 Jun 2007

A video analysis framework based on spatiotemporal saliency calculation is presented. We propose a novel scheme for generating saliency in video sequences by taking into account both the spatial extent and dynamic evolution of regions. Towards this goal we extend a common image-oriented computational model of saliency-based visual attention to handle spatiotemporal analysis of video in a volumetric framework. The main claim is that attention acts as an efficient preprocessing step of a video sequence in order to obtain a compact representation of its content in the form of salient events/objects. The model has been implemented and qualitative as well as quantitative examples illustrating its performance are shown.

@article{J10,

title = {Bottom-Up Spatiotemporal Visual Attention Model for Video Analysis},

author = {Rapantzikos, Konstantinos and Tsapatsoulis, Nicolas and Avrithis, Yannis and Kollias, Stefanos},

journal = {IET Image Processing (IP)},

volume = {1},

number = {2},

month = {6},

pages = {237--248},

year = {2007}

}2006

2(3):17-36 Jul 2006

In this article, an approach to semantic image analysis is presented. Under the proposed approach, ontologies are used to capture general, spatial, and contextual knowledge of a domain, and a genetic algorithm is applied to realize the final annotation. The employed domain knowledge considers high-level information in terms of the concepts of interest of the examined domain, contextual information in the form of fuzzy ontological relations, as well as low-level information in terms of prototypical low-level visual descriptors. To account for the inherent ambiguity in visual information, uncertainty has been introduced in the spatial relations definition. First, an initial hypothesis set of graded annotations is produced for each image region, and then context is exploited to update appropriately the estimated degrees of confidence. Finally, a genetic algorithm is applied to decide the most plausible annotation by utilizing the visual and the spatial concepts definitions included in the domain ontology. Experiments with a collection of photographs belonging to two different domains demonstrate the performance of the proposed approach.

@article{J11,

title = {Knowledge-Assisted Image Analysis Based on Context and Spatial Optimization},

author = {Papadopoulos, Georgios and Mylonas, Phivos and Mezaris, Vasileios and Avrithis, Yannis and Kompatsiaris, Ioannis},

journal = {International Journal on Semantic Web and Information Systems (SWIS)},

volume = {2},

number = {3},

month = {7},

pages = {17--36},

year = {2006}

}Special issue on Knowledge-Based Digital Media Processing

153(3):255-262 Jun 2006

Knowledge representation and annotation of multimedia documents typically have been pursued in two different directions. Previous approaches have focused either on low level descriptors, such as dominant color, or on the semantic content dimension and corresponding manual annotations, such as person or vehicle. In this paper, we present a knowledge infrastructure and a experimentation platform for semantic annotation to bridge the two directions. Ontologies are being extended and enriched to include low-level audiovisual features and descriptors. Additionally, we present a tool that allows for linking low-level MPEG-7 visual descriptions to ontologies and annotations. This way we construct ontologies that include prototypical instances of high-level domain concepts together with a formal specification of the corresponding visual descriptors. This infrastructure is exploited by a knowledge-assisted analysis framework that may handle problems like segmentation, tracking, feature extraction and matching in order to classify scenes, identify and label objects, thus automatically create the associated semantic metadata.

@article{J9,

title = {Knowledge Representation and Semantic Annotation of Multimedia Content},

author = {Petridis, Kosmas and Bloehdorn, Stephan and Saathoff, Carsten and Simou, Nikolaos and Dasiopoulou, Stamatia and Tzouvaras, Vassilis and Handschuh, Siegfried and Avrithis, Yannis and Kompatsiaris, Ioannis and Staab, Steffen},

journal = {IEE Proceedings on Vision, Image and Signal Processing (VISP) (Special Issue on Knowledge-Based Digital Media Processing)},

volume = {153},

number = {3},

month = {6},

pages = {255--262},

year = {2006}

}157(3):341-372 Feb 2006

The property of transitivity is one of the most important for fuzzy binary relations, especially in the cases when they are used for the representation of real life similarity or ordering information. As far as the algorithmic part of the actual calculation of the transitive closure of such relations is concerned, works in the literature mainly focus on crisp symmetric relations, paying little attention to the case of general fuzzy binary relations. Most works that deal with the algorithmic part of the transitive closure of fuzzy relations only focus on the case of max-min transitivity, disregarding other types of transitivity. In this paper, after formalizing the notion of sparseness and providing a representation model for sparse relations that displays both computational and storage merits, we propose an algorithm for the incremental update of fuzzy sup-t transitive relations. The incremental transitive update (ITU) algorithm achieves the re-establishment of transitivity when an already transitive relation is only locally disturbed. Based on this algorithm, we propose an extension to handle the sup-t transitive closure of any fuzzy binary relation, through a novel incremental transitive closure (ITC) algorithm. The ITU and ITC algorithms can be applied on any fuzzy binary relation and t-norm; properties such as reflexivity, symmetricity and idempotency are not a requirement. Under the specified assumptions for the average sparse relation, both of the proposed algorithms have considerably smaller computational complexity than the conventional approach; this is both established theoretically and verified via appropriate computing experiments.

@article{J8,

title = {Computationally efficient {sup-$t$} transitive closure for sparse fuzzy binary relations},

author = {Wallace, Manolis and Avrithis, Yannis and Kollias, Stefanos},

journal = {Fuzzy Sets and Systems (FSS)},

volume = {157},

number = {3},

month = {2},

pages = {341--372},

year = {2006}

}36(1):34-52 Jan 2006

During the last few years numerous multimedia archives have made extensive use of digitized storage and annotation technologies. Still, the development of single points of access, providing common and uniform access to their data, despite the efforts and accomplishments of standardization organizations, has remained an open issue, as it involves the integration of various large scale heterogeneous and heterolingual systems. In this paper, we describe a mediator system that achieves architectural integration through an extended 3-tier architecture and content integration through semantic modeling. The described system has successfully integrated five multimedia archives, quite different in nature and content from each other, while also providing for easy and scalable inclusion of more archives in the future.

@article{J7,

title = {Integrating Multimedia Archives: The Architecture and the Content Layer},

author = {Wallace, Manolis and Athanasiadis, Thanos and Avrithis, Yannis and Delopoulos, Anastasios and Kollias, Stefanos},

journal = {IEEE Transactions on Systems, Man, and Cybernetics, Part A: Systems and Humans (SMC-A)},

volume = {36},

number = {1},

month = {1},

pages = {34--52},

year = {2006}

}2003

9(6):510-519 Jun 2003

In this paper, an integrated information system is presented that offers enhanced search and retrieval capabilities to users of heterogeneous digital audiovisual (a/v) archives. This innovative system exploits the advances in handling a/v content and related metadata, as introduced by MPEG-4 and worked out by MPEG-7, to offer advanced services characterized by the tri-fold "semantic phrasing of the request (query)", "unified handling" and "personalized response". The proposed system is targeting the intelligent extraction of semantic information from a/v and text related data taking into account the nature of the queries that users my issue, and the context determined by user profiles. It also provides a personalization process of the response in order to provide end-users with desired information. From a technical point of view, the FAETHON system plays the role of an intermediate access server residing between the end users and multiple heterogeneous audiovisual archives organized according to the new MPEG standards.

@article{J6,

title = {Unified Access to Heterogeneous Audiovisual Archives},

author = {Avrithis, Yannis and Stamou, Giorgos and Wallace, Manolis and Marques, Ferran and Salembier, Philippe and Giro, Xavier and Haas, Werner and Vallant, Heribert and Zufferey, Michael},

journal = {Journal of Universal Computer Science (JUCS)},

volume = {9},

number = {6},

month = {6},

pages = {510--519},

year = {2003}

}2001

Special issue on Image Indexation

4(2-3):93-107 Jun 2001

Pictures and video sequences showing human faces are of high importance in content-based retrieval systems, and consequently face detection has been established as an important tool in the framework of many multimedia applications like indexing, scene classification and news summarisation. In this work, we combine skin colour and shape features with template matching in an efficient way for the purpose of facial image indexing. We propose an adaptive two-dimensional Gaussian model of the skin colour distribution whose parameters are re-estimated based on the current image or frame, reducing generalisation problems. Masked areas obtained from skin colour detection are processed using morphological tools and assessed using global shape features. The verification stage is based on a template matching variation providing robust detection. Facial images and video sequences are indexed according to the number of included faces, their average colour components and their scale, leading to new types of content-based retrieval criteria in query-by-example frameworks. Experimental results have shown that the proposed implementation combines efficiency, robustness and speed, and could be easily embedded in generic visual information retrieval systems or video databases.

@article{J5,

title = {Facial Image Indexing in Multimedia Databases},

author = {Tsapatsoulis, Nicolas and Avrithis, Yannis and Kollias, Stefanos},

journal = {Pattern Analysis and Applications (PAA) (Special Issue on Image Indexation)},

volume = {4},

number = {2--3},

month = {6},

pages = {93--107},

year = {2001}

}13(2):80-94 Nov 2001

A novel method for two-dimensional curve normalization with respect to affine transformations is presented in this paper, which allows an affine-invariant curve representation to be obtained without any actual loss of information on the original curve. It can be applied as a preprocessing step to any shape representation, classification, recognition, or retrieval technique, since it effectively decouples the problem of affine-invariant description from feature extraction and pattern matching. Curves estimated from object contours are first modeled by cubic B-splines and then normalized in several steps in order to eliminate translation, scaling, skew, starting point, rotation, and reflection transformations, based on a combination of curve features including moments and Fourier descriptors.

@article{J4,

title = {Affine-Invariant Curve Normalization for Object Shape Representation, Classification, and Retrieval},

author = {Avrithis, Yannis and Xirouhakis, Yiannis and Kollias, Stefanos},

journal = {Machine Vision and Applications (MVA)},

volume = {13},

number = {2},

month = {11},

pages = {80--94},

year = {2001}

}2000

Special issue on Fuzzy Logic in Signal Processing

80(6):1049-1067 Jun 2000

In this paper, a fuzzy representation of visual content is proposed, which is useful for the new emerging multimedia applications, such as content-based image indexing and retrieval, video browsing and summarization. In particular, a multidimensional fuzzy histogram is constructed for each video frame based on a collection of appropriate features, extracted using video sequence analysis techniques. This approach is then applied both for video summarization, in the context of a content-based sampling algorithm, and for content-based indexing and retrieval. In the first case, video summarization is accomplished by discarding shots or frames of similar visual content so that only a small but meaningful amount of information is retained (key-frames). In the second case, a content-based retrieval scheme is investigated, so that the most similar images to a query are extracted. Experimental results and comparison with other known methods are presented to indicate the good performance of the proposed scheme on real-life video recordings.

@article{J3,

title = {A Fuzzy Video Content Representation for Video Summarization and Content-Based Retrieval},

author = {Doulamis, Anastasios and Doulamis, Nikolaos and Avrithis, Yannis and Kollias, Stefanos},

journal = {Signal Processing (SP) (Special Issue on Fuzzy Logic in Signal Processing)},

volume = {80},

number = {6},

month = {6},

pages = {1049--1067},

year = {2000}

}Special issue on {3D} Video Technology

10(4):501-517 Jun 2000

An efficient technique for summarization of stereoscopic video sequences is presented in this paper, which extracts a small but meaningful set of video frames using a content-based sampling algorithm. The proposed video-content representation provides the capability of browsing digital stereoscopic video sequences and performing more efficient content-based queries and indexing. Each stereoscopic video sequence is first partitioned into shots by applying a shot-cut detection algorithm so that frames (or stereo pairs) of similar visual characteristics are gathered together. Each shot is then analyzed using stereo-imaging techniques, and the disparity field, occluded areas, and depth map are estimated. A multiresolution implementation of the Recursive Shortest Spanning Tree (RSST) algorithm is applied for color and depth segmentation, while fusion of color and depth segments is employed for reliable video object extraction. In particular, color segments are projected onto depth segments so that video objects on the same depth plane are retained, while at the same time accurate object boundaries are extracted. Feature vectors are then constructed using multidimensional fuzzy classification of segment features including size, location, color, and depth. Shot selection is accomplished by clustering similar shots based on the generalized Lloyd-Max algorithm, while for a given shot, key frames are extracted using an optimization method for locating frames of minimally correlated feature vectors. For efficient implementation of the latter method, a genetic algorithm is used. Experimental results are presented, which indicate the reliable performance of the proposed scheme on real-life stereoscopic video sequences.

@article{J2,

title = {Efficient Summarization of Stereoscopic Video Sequences},

author = {Doulamis, Nikolaos and Doulamis, Anastasios and Avrithis, Yannis and Ntalianis, Klimis and Kollias, Stefanos},

journal = {IEEE Transactions on Circuits and Systems for Video Technology (CSVT) (Special Issue on {3D} Video Technology)},

volume = {10},

number = {4},

month = {6},

pages = {501--517},

year = {2000}

}1999

Special issue on Content-Based Access of Image and Video Libraries

75(1-2):3-24 Jul 1999

A video content representation framework is proposed in this paper for extracting limited, but meaningful, information of video data, directly from the MPEG compressed domain. A hierarchical color and motion segmentation scheme is applied to each video shot, transforming the frame-based representation to a feature-based one. The scheme is based on a multiresolution implementation of the recursive shortest spanning tree (RSST) algorithm. Then, all segment features are gathered together using a fuzzy multidimensional histogram to reduce the possibility of classifying similar segments to different classes. Extraction of several key frames is performed for each shot in a content-based rate-sampling framework. Two approaches are examined for key frame extraction. The first is based on examination of the temporal variation of the feature vector trajectory; the second is based on minimization of a cross-correlation criterion of the video frames. For efficient implementation of the latter approach, a logarithmic search (along with a stochastic version) and a genetic algorithm are proposed. Experimental results are presented which illustrate the performance of the proposed techniques, using synthetic and real life MPEG video sequences.

@article{J1,

title = {A Stochastic Framework for Optimal Key Frame Extraction from {MPEG} Video Databases},

author = {Avrithis, Yannis and Doulamis, Anastasios and Doulamis, Nikolaos and Kollias, Stefanos},

journal = {Computer Vision and Image Understanding (CVIU) (Special Issue on Content-Based Access of Image and Video Libraries)},

volume = {75},

number = {1--2},

month = {7},

pages = {3--24},

year = {1999}

}Conference proceedings

2025

Tucson, AZ, US Mar 2025

This work addresses composed image retrieval in the context of domain conversion, where the content of the query image is to be retrieved in a domain given by the query text. We show that a strong generic vision-language model already provides sufficient descriptive power and no further learning is necessary. The query image is mapped to the input text space by textual inversion. In contrast to the common practice of inverting to the continuous space of textual tokens, we opt for inversion into the discrete space of words using a text vocabulary. This distinction is empirically validated and proven to be a pivotal factor. Through inversion, we represent the image by soft assignment to the vocabulary. Such a text description is made more robust by engaging a set of images visually similar to the query image. Images are retrieved via a weighted ensemble of text queries, each composed of one of the words assigned to the query image and the domain query text. Our method outperforms prior art by a large margin on standard as well as on newly introduced benchmarks.

@conference{C138,

title = {Composed Image Retrieval for Training-Free Domain Conversion},

author = {Efthymiadis, Nikos and Psomas, Bill and Laskar, Zakaria and Karantzalos, Konstantinos and Avrithis, Yannis and Chum, Ondrej and Tolias, Giorgos},

booktitle = {Proceedings of IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},

month = {3},

address = {Tucson, AZ, US},

year = {2025}

}2024

part of IEEE Conference on Computer Vision and Pattern Recognition

Seattle, WA, US Jun 2024

Explanations obtained from transformer-based architectures, in the form of raw attention, can be seen as a class agnostic saliency map. Additionally, attention-based pooling serves as a form of masking in feature space. Motivated by this observation, we design an attention-based pooling mechanism intended to replace global average pooling during inference. This mechanism, called Cross Attention Stream (CA-Stream), comprises a stream of cross attention blocks interacting with features at different network levels. CA-Stream enhances interpretability properties in existing image recognition models, while preserving their recognition properties.

@conference{C137,

title = {{CA}-Stream: Attention-based pooling for interpretable image recognition},

author = {Torres Figueroa, Felipe and Zhang, Hanwei and Sicre, Ronan and Ayache, Stephane and Avrithis, Yannis},

booktitle = {Proceedings of 3rd Workshop on Explainable AI for Computer Vision (XAI4CV), part of IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {6},

address = {Seattle, WA, US},

year = {2024}

}Athens, Greece Jul 2024

The surge in data volume within the field of remote sensing has necessitated efficient methods for retrieving relevant information from extensive image archives. Conventional unimodal queries, whether visual or textual, are insufficient and restrictive. To address this limitation, we introduce the task of composed image retrieval in remote sensing, allowing users to combine query images with a textual part that modifies attributes such as color, texture, context, or more, thereby enhancing the expressivity of the query.

We demonstrate that a vision-language model possesses sufficient descriptive power and, when coupled with the proposed fusion method, eliminates the necessity for further learning. We present a new evaluation benchmark focused on shape, color, density, and quantity modifications. Our work not only sets the state-of-the-art for this task, but also serves as a foundational step in addressing a gap in the field of remote sensing image retrieval.

@conference{C136,

title = {Composed Image Retrieval for Remote Sensing},

author = {Psomas, Bill and Kakogeorgiou, Ioannis and Efthymiadis, Nikos and Tolias, Giorgos and Chum, Ondrej and Avrithis, Yannis and Karantzalos, Konstantinos},

booktitle = {Proceedings of IEEE International Geoscience and Remote Sensing Symposium (IGARSS) (Oral)},

month = {7},

address = {Athens, Greece},

year = {2024}

}Seattle, WA, US Jun 2024

How important is it for training and evaluation sets to not have class overlap in image retrieval? We revisit Google Landmarks v2 clean, the most popular training set, by identifying and removing class overlap with Revisited Oxford and Paris, the most popular evaluation set. By comparing the original and the new $\mathcal{R}$GLDv2-clean on a benchmark of reproduced state-of-the-art methods, our findings are striking. Not only is there a dramatic drop in performance, but it is inconsistent across methods, changing the ranking.

What does it take to focus on objects or interest and ignore background clutter when indexing? Do we need to train an object detector and the representation separately? Do we need location supervision? We introduce Single-stage Detect-to-Retrieve (CiDeR), an end-to-end, single-stage pipeline to detect objects of interest and extract a global image representation. We outperform previous state-of-the-art on both existing training sets and the new $\mathcal{R}$GLDv2-clean. Our dataset is available at https://github.com/dealicious-inc/RGLDv2-clean.

@conference{C135,

title = {On Train-Test Class Overlap and Detection for Image Retrieval},

author = {Song, Chull Hwan and Yoon, Jooyoung and Hwang, Taebaek and Choi, Shunghyun and Gu, Yeong Hyeon and Avrithis, Yannis},

booktitle = {Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {6},

address = {Seattle, WA, US},

year = {2024}

}Vienna, Austria May 2024

Self-supervised learning has unlocked the potential of scaling up pretraining to billions of images, since annotation is unnecessary. But are we making the best use of data? How more economical can we be? In this work, we attempt to answer this question by making two contributions. First, we investigate first-person videos and introduce a "Walking Tours" dataset. These videos are high-resolution, hours-long, captured in a single uninterrupted take, depicting a large number of objects and actions with natural scene transitions. They are unlabeled and uncurated, thus realistic for self-supervision and comparable with human learning.

Second, we introduce a novel self-supervised image pretraining method tailored for learning from continuous videos. Existing methods typically adapt image-based pretraining approaches to incorporate more frames. Instead, we advocate a "tracking to learn to recognize" approach. Our method called DoRA, leads to attention maps that DiscOver and tRAck objects over time in an end-to-end manner, using transformer cross-attention. We derive multiple views from the tracks and use them in a classical self-supervised distillation loss. Using our novel approach, a single Walking Tours video remarkably becomes a strong competitor to ImageNet for several image and video downstream tasks.

@conference{C134,

title = {Is ImageNet worth 1 video? Learning strong image encoders from 1 long unlabelled video},

author = {Venkataramanan, Shashanka and Rizve, Mamshad Nayeem and Carreira, Jo\~ao and Asano, Yuki M. and Avrithis, Yannis},

booktitle = {Proceedings of International Conference on Learning Representations (ICLR) (Oral). Outstanding Paper Honorable Mention},

month = {5},

address = {Vienna, Austria},

year = {2024}

}Rome, Italy Feb 2024

This paper studies interpretability of convolutional networks by means of saliency maps. Most approaches based on Class Activation Maps (CAM) combine information from fully connected layers and gradient through variants of backpropagation. However, it is well understood that gradients are noisy and alternatives like guided backpropagation have been proposed to obtain better visualization at inference. In this work, we present a novel training approach to improve the quality of gradients for interpretability. In particular, we introduce a regularization loss such that the gradient with respect to the input image obtained by standard backpropagation is similar to the gradient obtained by guided backpropagation. We find that the resulting gradient is qualitatively less noisy and improves quantitatively the interpretability properties of different networks, using several interpretability methods.

@conference{C133,

title = {A Learning Paradigm for Interpretable Gradients},

author = {Torres Figueroa, Felipe and Zhang, Hanwei and Sicre, Ronan and Avrithis, Yannis and Ayache, Stephane},

booktitle = {Proceedings of International Conference on Computer Vision Theory and Applications (VISAPP) (Oral)},

month = {2},

address = {Rome, Italy},

year = {2024}

}Waikoloa, HI, US Jan 2024

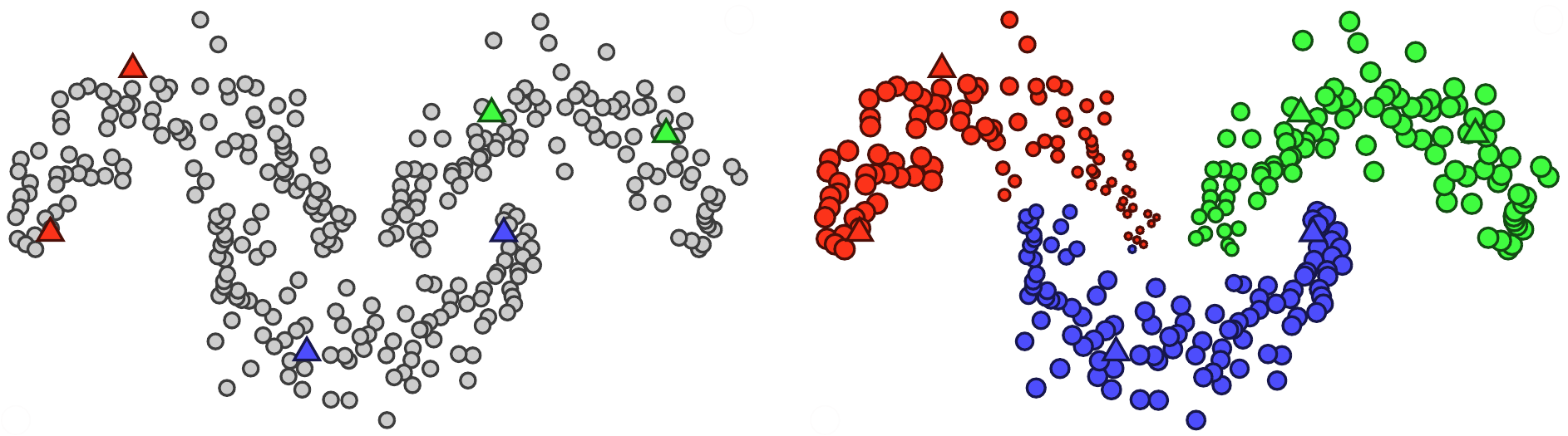

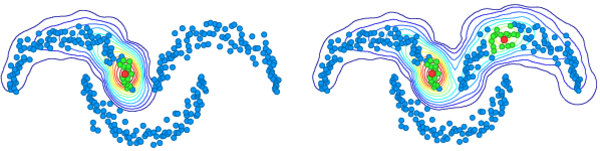

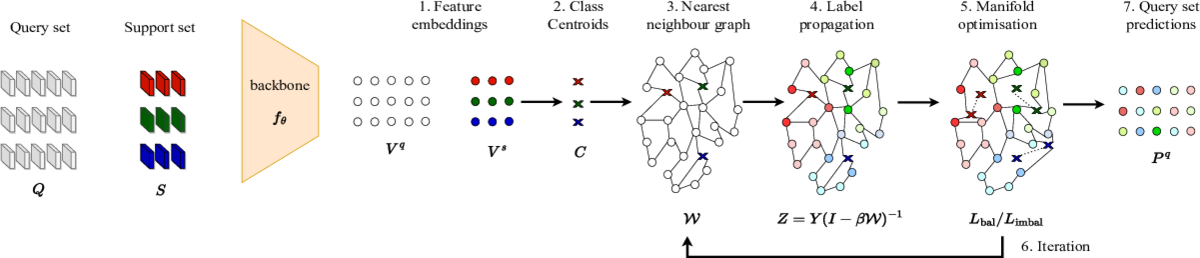

Transductive few-shot learning algorithms have showed substantially superior performance over their inductive counterparts by leveraging the unlabeled queries at inference. However, the vast majority of transductive methods are evaluated on perfectly class-balanced benchmarks. It has been shown that they undergo remarkable drop in performance under a more realistic, imbalanced setting.

To this end, we propose a novel algorithm to address imbalanced transductive few-shot learning, named Adaptive Manifold. Our algorithm exploits the underlying manifold of the labeled examples and unlabeled queries by using manifold similarity to predict the class probability distribution of every query. It is parameterized by one centroid per class and a set of manifold parameters that determine the manifold. All parameters are optimized by minimizing a loss function that can be tuned towards class-balanced or imbalanced distributions. The manifold similarity shows substantial improvement over Euclidean distance, especially in the 1-shot setting.

Our algorithm outperforms all other state of the art methods in three benchmark datasets, namely miniImageNet, tieredImageNet and CUB, and two different backbones, namely ResNet-18, WideResNet-28-10. In certain cases, our algorithm outperforms the previous state of the art by as much as 4.2%.

@conference{C132,

title = {Adaptive manifold for imbalanced transductive few-shot learning},

author = {Lazarou, Michalis and Avrithis, Yannis and Stathaki, Tania},

booktitle = {Proceedings of IEEE Winter Conference on Applications of Computer Vision (WACV)},

month = {1},

address = {Waikoloa, HI, US},

year = {2024}

}2023

New Orleans, LA, US Dec 2023

Mixup refers to interpolation-based data augmentation, originally motivated as a way to go beyond empirical risk minimization (ERM). Its extensions mostly focus on the definition of interpolation and the space (input or embedding) where it takes place, while the augmentation process itself is less studied. In most methods, the number of generated examples is limited to the mini-batch size and the number of examples being interpolated is limited to two (pairs), in the input space.

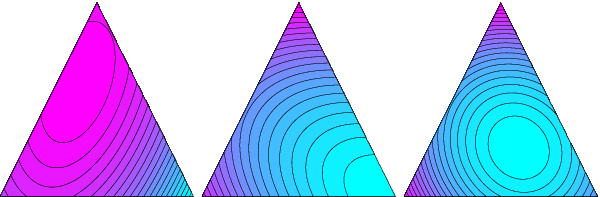

We make progress in this direction by introducing MultiMix, which generates an arbitrarily large number of interpolated examples beyond the mini-batch size, and interpolates the entire mini-batch in the embedding space. Effectively, we sample on the entire convex hull of the mini-batch rather than along linear segments between pairs of examples.

On sequence data we further extend to Dense MultiMix. We densely interpolate features and target labels at each spatial location and also apply the loss densely. To mitigate the lack of dense labels, we inherit labels from examples and weight interpolation factors by attention as a measure of confidence.

Overall, we increase the number of loss terms per mini-batch by orders of magnitude at little additional cost. This is only possible because of interpolating in the embedding space. We empirically show that our solutions yield significant improvement over state-of-the-art mixup methods on four different benchmarks, despite interpolation being only linear. By analyzing the embedding space, we show that the classes are more tightly clustered and uniformly spread over the embedding space, thereby explaining the improved behavior.

@conference{C131,

title = {Embedding Space Interpolation Beyond Mini-Batch, Beyond Pairs and Beyond Examples},

author = {Venkataramanan, Shashanka and Kijak, Ewa and Amsaleg, Laurent and Avrithis, Yannis},

booktitle = {Proceedings of Conference on Neural Information Processing Systems (NeurIPS)},

month = {12},

address = {New Orleans, LA, US},

year = {2023}

}part of International Conference on Computer Vision

Paris, France Oct 2023

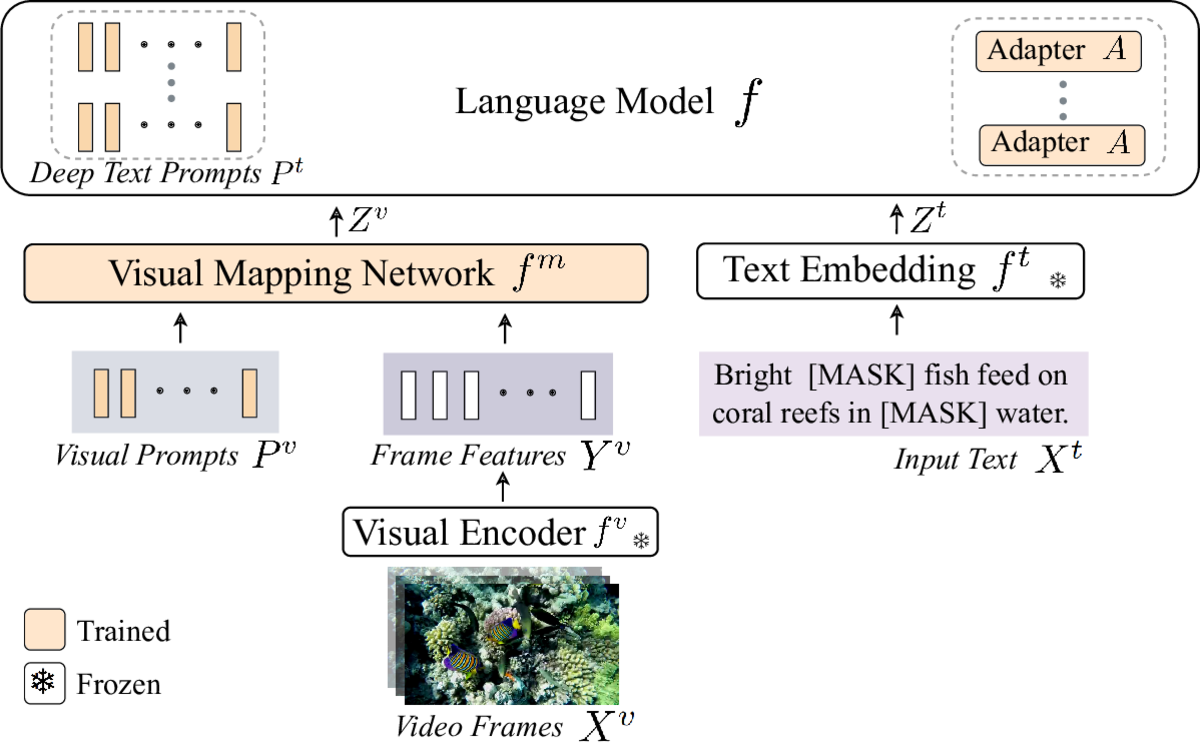

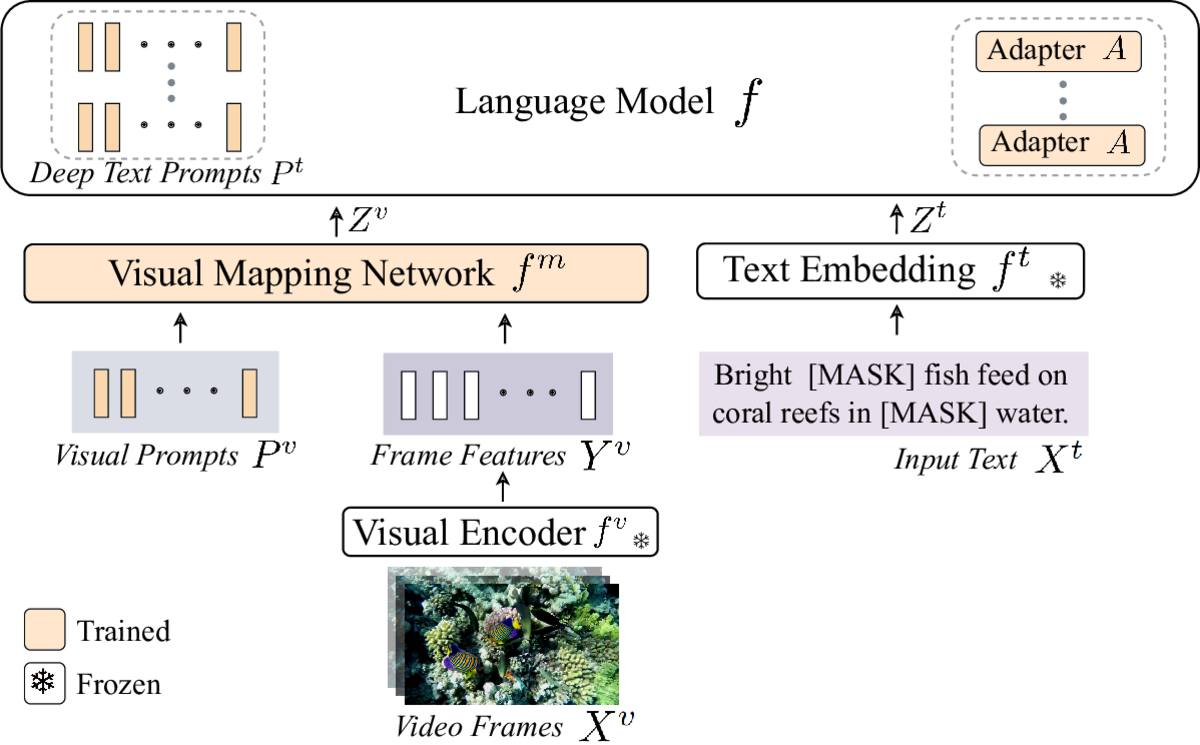

Recent vision-language models are driven by large-scale pretrained models. However, adapting pretrained models on limited data presents challenges such as overfitting, catastrophic forgetting, and the cross-modal gap between vision and language. We introduce a parameter-efficient method to address these challenges, combining multimodal prompt learning and a transformer-based mapping network, while keeping the pretrained models frozen. Our experiments on several video question answering benchmarks demonstrate the superiority of our approach in terms of performance and parameter efficiency on both zero-shot and few-shot settings. Our code is available at https://engindeniz.github.io/vitis.

@conference{C130,

title = {Zero-Shot and Few-Shot Video Question Answering with Multi-Modal Prompts},

author = {Engin, Deniz and Avrithis, Yannis},

booktitle = {Proceedings of 5th Workshop on Closing the Loop Between Vision and Language (CLVL), part of International Conference on Computer Vision (ICCV)},

month = {10},

address = {Paris, France},

year = {2023}

}Paris, France Oct 2023

Convolutional networks and vision transformers have different forms of pairwise interactions, pooling across layers and pooling at the end of the network. Does the latter really need to be different? As a by-product of pooling, vision transformers provide spatial attention for free, but this is most often of low quality unless self-supervised, which is not well studied. Is supervision really the problem? In this work, we develop a generic pooling framework and then we formulate a number of existing methods as instantiations. By discussing the properties of each group of methods, we derive SimPool, a simple attention-based pooling mechanism as a replacement of the default one for both convolutional and transformer encoders. We find that, whether supervised or self-supervised, this improves performance on pre-training and downstream tasks and provides attention maps delineating object boundaries in all cases. One could thus call SimPool universal. To our knowledge, we are the first to obtain attention maps in supervised transformers of at least as good quality as self-supervised, without explicit losses or modifying the architecture. Code at: https://github.com/billpsomas/simpool.

@conference{C129,

title = {Keep It {SimPool}: Who Said Supervised Transformers Suffer from Attention Deficit?},

author = {Psomas, Bill and Kakogeorgiou, Ioannis and Karantzalos, Konstantinos and Avrithis, Yannis},

booktitle = {Proceedings of International Conference on Computer Vision (ICCV)},

month = {10},

address = {Paris, France},

year = {2023}

}Kuala Lumpur, Malaysia Oct 2023

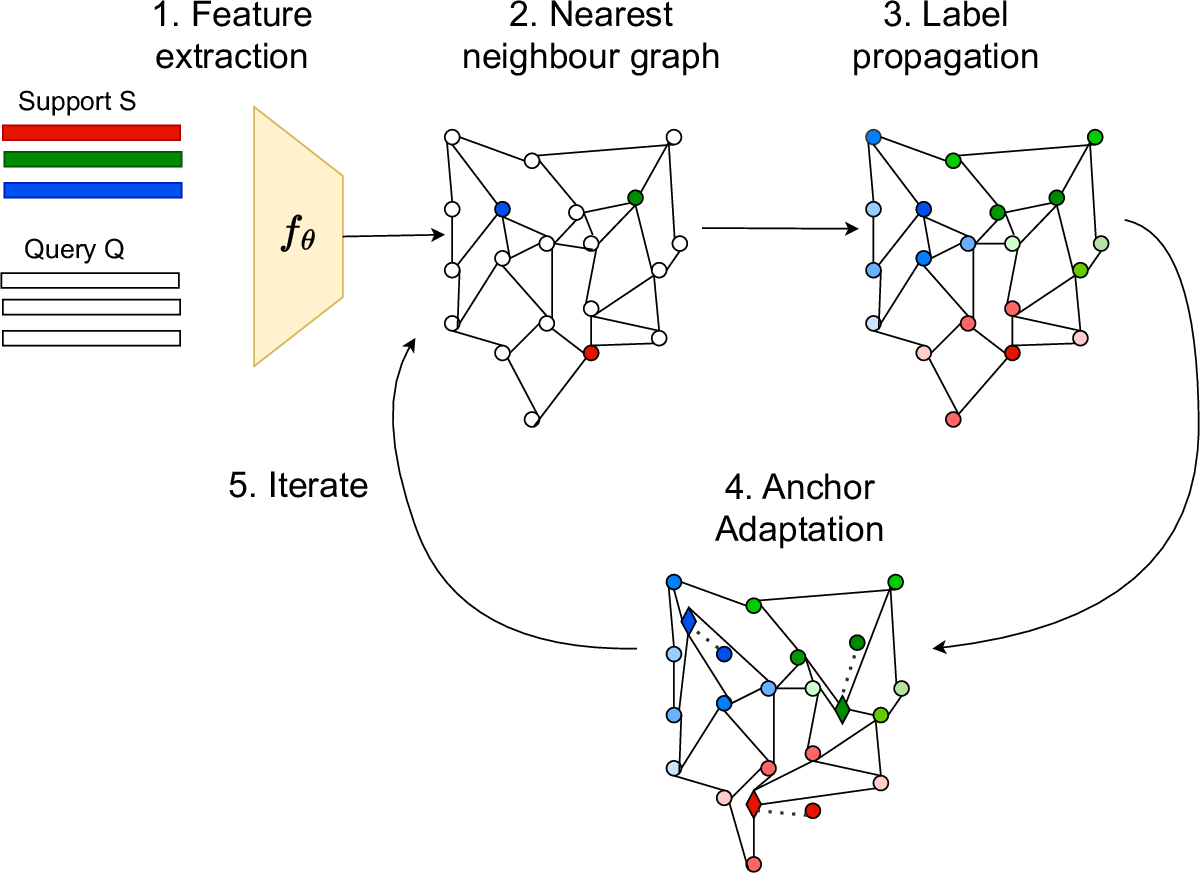

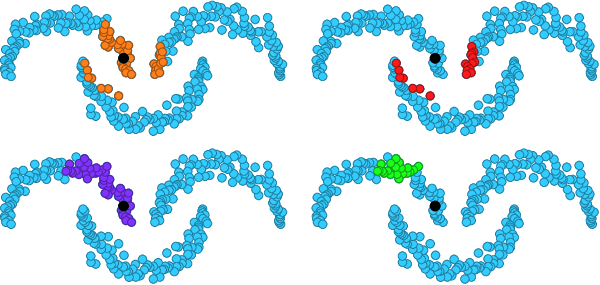

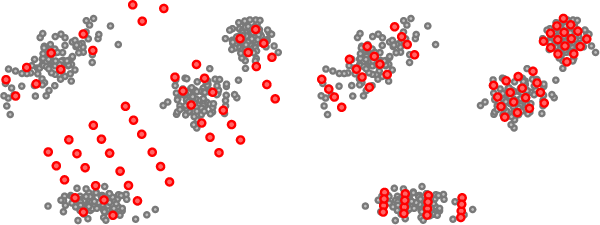

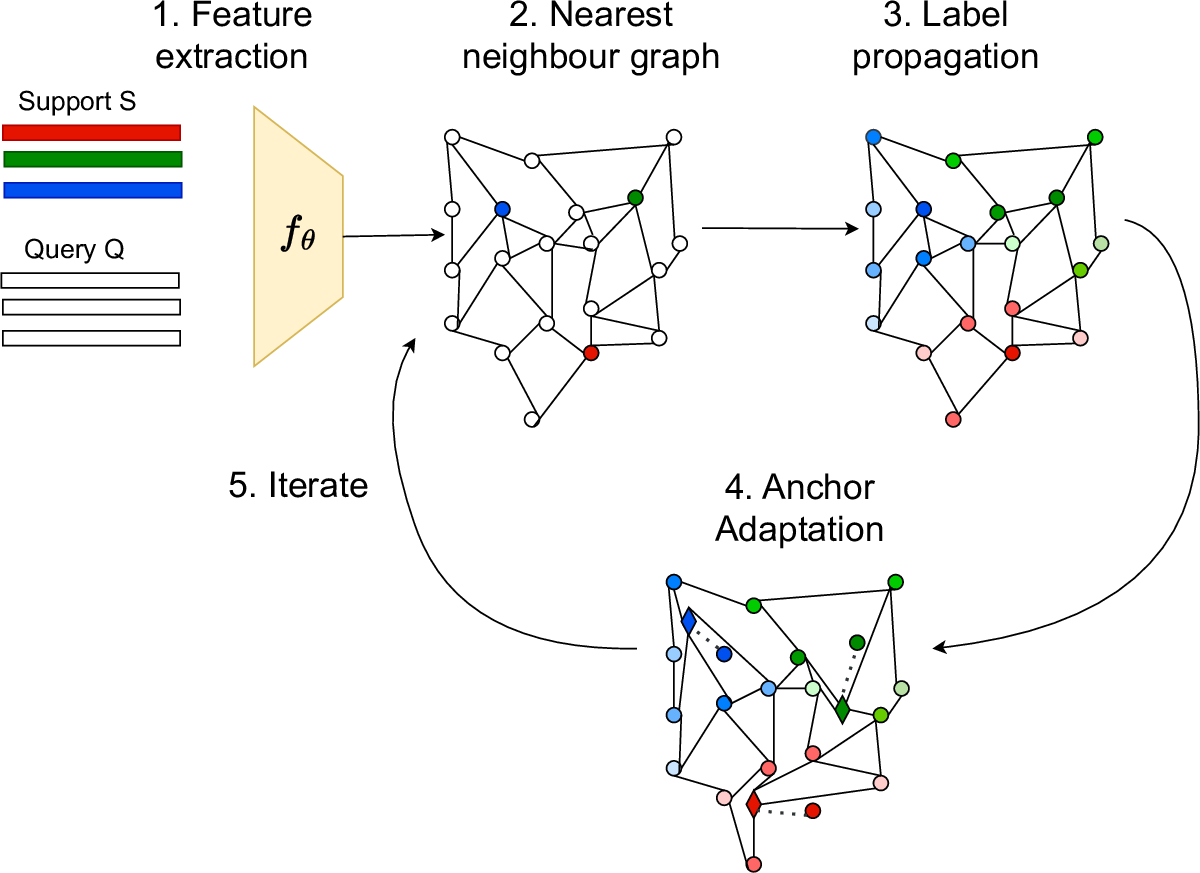

Few-shot learning addresses the issue of classifying images using limited labeled data. Exploiting unlabeled data through the use of transductive inference methods such as label propagation has been shown to improve the performance of few-shot learning significantly. Label propagation infers pseudo-labels for unlabeled data by utilizing a constructed graph that exploits the underlying manifold structure of the data. However, a limitation of the existing label propagation approaches is that the positions of all data points are fixed and might be sub-optimal so that the algorithm is not as effective as possible. In this work, we propose a novel algorithm that adapts the feature embeddings of the labeled data by minimizing a differentiable loss function optimizing their positions in the manifold in the process. Our novel algorithm, Adaptive Anchor Label Propagation, outperforms the standard label propagation algorithm by as much as 7% and 2% in the 1-shot and 5-shot settings respectively. We provide experimental results highlighting the merits of our algorithm on four widely used few-shot benchmark datasets, namely miniImageNet, tieredImageNet, CUB and CIFAR-FS and two commonly used backbones, ResNet12 and WideResNet-28-10. The source code can be found at https://github.com/MichalisLazarou/A2LP.

@conference{C128,

title = {Adaptive Anchors Label Propagation for Transductive Few-Shot Learning},

author = {Lazarou, Michalis and Avrithis, Yannis and Ren, Guangyu and Stathaki, Tania},

booktitle = {Proceedings of International Conference on Image Processing (ICIP)},

month = {10},

address = {Kuala Lumpur, Malaysia},

year = {2023}

}Vancouver, Canada Jun 2023

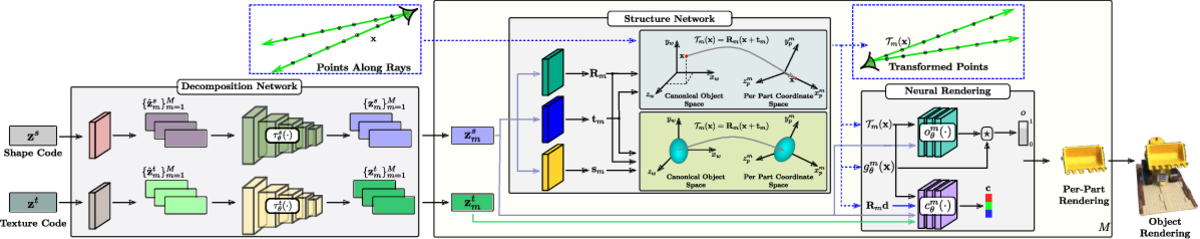

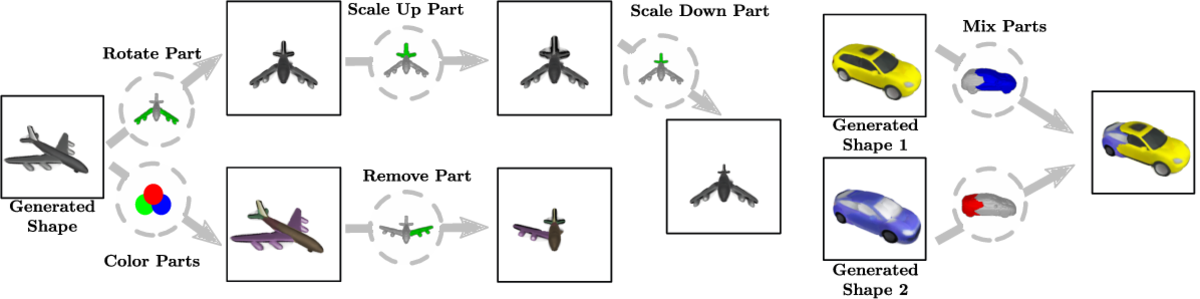

Impressive progress in generative models and implicit representations gave rise to methods that can generate 3D shapes of high quality. However, being able to locally control and edit shapes is another essential property that can unlock several content creation applications. Local control can be achieved with part-aware models, but existing methods require 3D supervision and cannot produce textures. In this work, we devise PartNeRF, a novel part-aware generative model for editable 3D shape synthesis that does not require any explicit 3D supervision. Our model generates objects as a set of locally defined NeRFs, augmented with an affine transformation. This enables several editing operations such as applying transformations on parts, mixing parts from different objects etc. To ensure distinct, manipulable parts we enforce a hard assignment of rays to parts that makes sure that the color of each ray is only determined by a single NeRF. As a result, altering one part does not affect the appearance of the others. Evaluations on various ShapeNet categories demonstrate the ability of our model to generate editable 3D objects of improved fidelity, compared to previous part-based generative approaches that require 3D supervision or models relying on NeRFs.

@conference{C127,

title = {Generating Part-Aware Editable 3D Shapes Without 3D Supervision},

author = {Tertikas, Konstantinos and Paschalidou, Despoina and Pan, Boxiao and Park, Jeong Joon and Uy, Mikaela Angelina and Emiris, Ioannis and Avrithis, Yannis and Guibas, Leonidas},

booktitle = {Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {6},

address = {Vancouver, Canada},

year = {2023}

}Waikoloa, HI, US Jan 2023

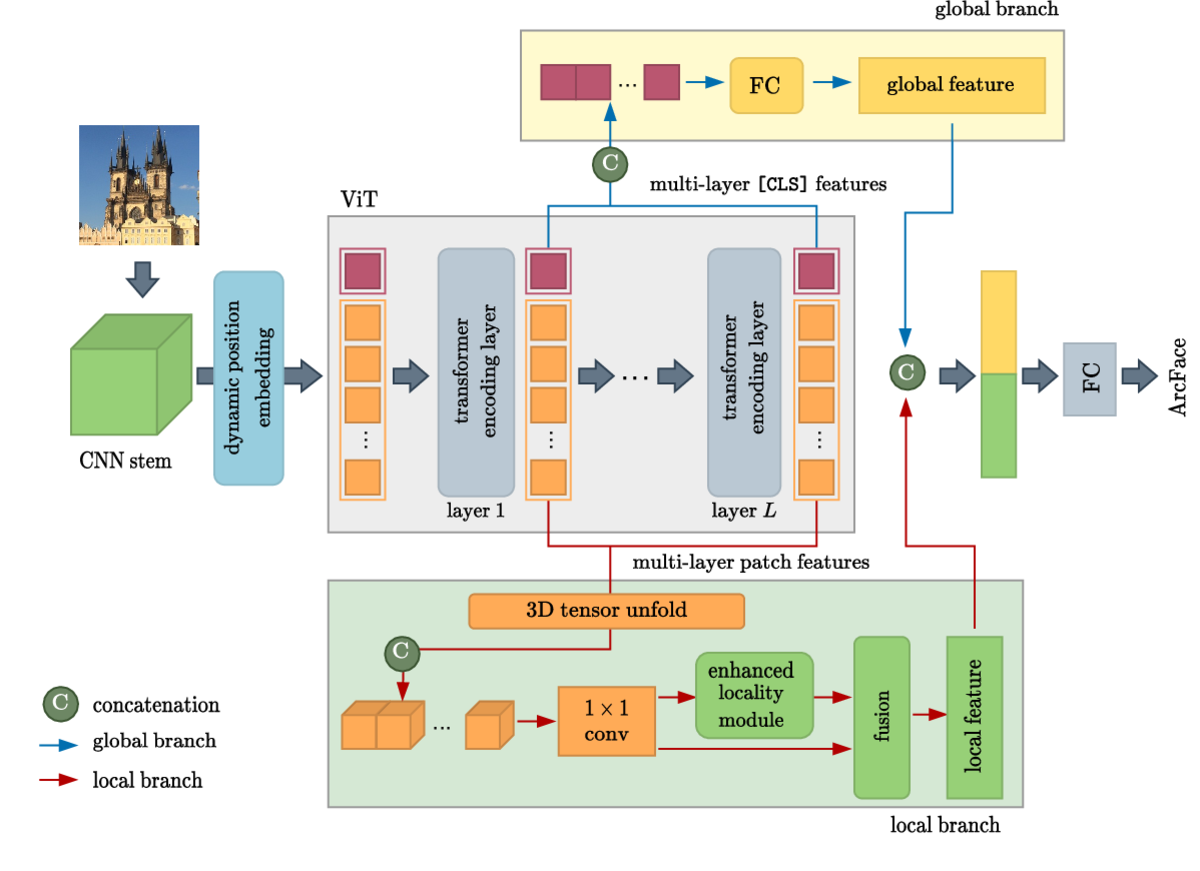

Vision transformers have achieved remarkable progress in vision tasks such as image classification and detection. However, in instance-level image retrieval, transformers have not yet shown good performance compared to convolutional networks. We propose a number of improvements that make transformers outperform the state of the art for the first time. (1) We show that a hybrid architecture is more effective than plain transformers, by a large margin. (2) We introduce two branches collecting global (classification token) and local (patch tokens) information, from which we form a global image representation. (3) In each branch, we collect multi-layer features from the transformer encoder, corresponding to skip connections across distant layers. (4) We enhance locality of interactions at the deeper layers of the encoder, which is the relative weakness of vision transformers. We train our model on all commonly used training sets and, for the first time, we make fair comparisons separately per training set. In all cases, we outperform previous models based on global representation. Public code is available at https://github.com/dealicious-inc/DToP.

@conference{C126,

title = {Boosting vision transformers for image retrieval},

author = {Song, Chull Hwan and Yoon, Jooyoung and Choi, Shunghyun and Avrithis, Yannis},

booktitle = {Proceedings of IEEE Winter Conference on Applications of Computer Vision (WACV)},

month = {1},

address = {Waikoloa, HI, US},

year = {2023}

}2022

Tel Aviv, Isreal Oct 2022

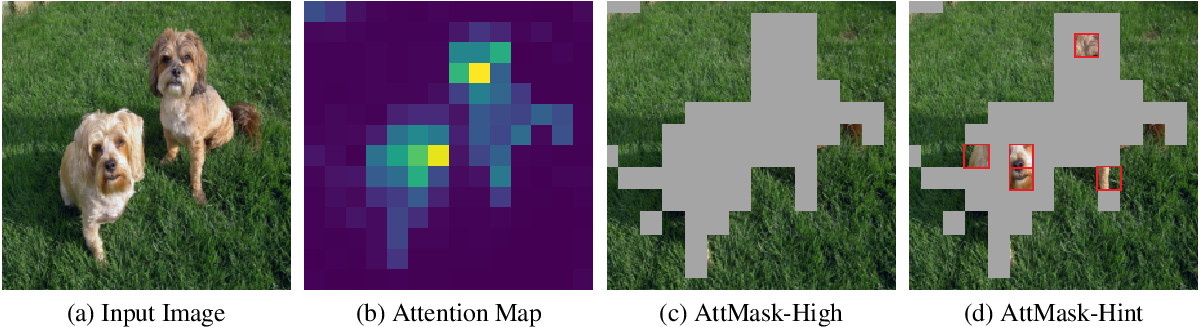

Transformers and masked language modeling are quickly being adopted and explored in computer vision as vision transformers and masked image modeling (MIM). In this work, we argue that image token masking differs from token masking in text, due to the amount and correlation of tokens in an image. In particular, to generate a challenging pretext task for MIM, we advocate a shift from random masking to informed masking. We develop and exhibit this idea in the context of distillation-based MIM, where a teacher transformer encoder generates an attention map, which we use to guide masking for the student.

We thus introduce a novel masking strategy, called attention-guided masking (AttMask), and we demonstrate its effectiveness over random masking for dense distillation-based MIM as well as plain distillation-based self-supervised learning on classification tokens. We confirm that AttMask accelerates the learning process and improves the performance on a variety of downstream tasks. We provide the implementation code at https://github.com/gkakogeorgiou/attmask.

@conference{C125,

title = {What to Hide from Your Students: Attention-Guided Masked Image Modeling},

author = {Kakogeorgiou, Ioannis and Gidaris, Spyros and Psomas, Bill and Avrithis, Yannis and Bursuc, Andrei and Karantzalos, Konstantinos and Komodakis, Nikos},

booktitle = {Proceedings of European Conference on Computer Vision (ECCV)},

month = {10},

address = {Tel Aviv, Isreal},

year = {2022}

}New Orleans, LA, US Jun 2022

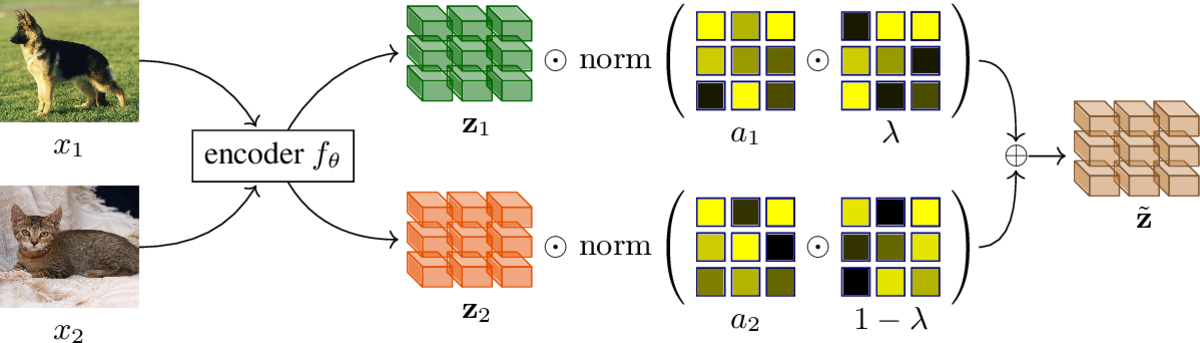

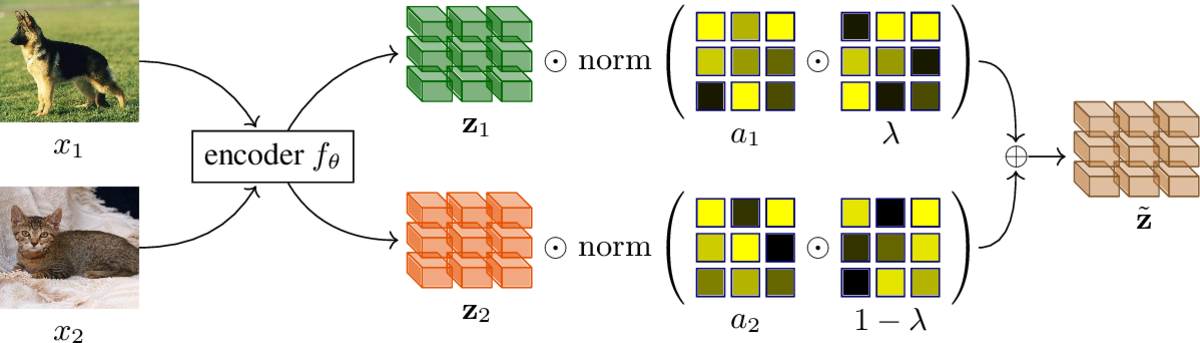

Mixup is a powerful data augmentation method that interpolates between two or more examples in the input or feature space and between the corresponding target labels. Many recent mixup methods focus on cutting and pasting two or more objects into one image, which is more about efficient processing than interpolation. However, how to best interpolate images is not well defined. In this sense, mixup has been connected to autoencoders, because often autoencoders "interpolate well", for instance generating an image that continuously deforms into another.

In this work, we revisit mixup from the interpolation perspective and introduce AlignMix, where we geometrically align two images in the feature space. The correspondences allow us to interpolate between two sets of features, while keeping the locations of one set. Interestingly, this gives rise to a situation where mixup retains mostly the geometry or pose of one image and the texture of the other, connecting it to style transfer. More than that, we show that an autoencoder can still improve representation learning under mixup, without the classifier ever seeing decoded images. AlignMix outperforms state-of-the-art mixup methods on five different benchmarks.

@conference{C124,

title = {{AlignMixup}: Improving representations by interpolating aligned features},

author = {Venkataramanan, Shashanka and Kijak, Ewa and Amsaleg, Laurent and Avrithis, Yannis},

booktitle = {Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {6},

address = {New Orleans, LA, US},

year = {2022}

}Virtual Apr 2022

Metric learning involves learning a discriminative representation such that embeddings of similar classes are encouraged to be close, while embeddings of dissimilar classes are pushed far apart. State-of-the-art methods focus mostly on sophisticated loss functions or mining strategies. On the one hand, metric learning losses consider two or more examples at a time. On the other hand, modern data augmentation methods for classification consider two or more examples at a time. The combination of the two ideas is under-studied.

In this work, we aim to bridge this gap and improve representations using mixup, which is a powerful data augmentation approach interpolating two or more examples and corresponding target labels at a time. This task is challenging because, unlike classification, the loss functions used in metric learning are not additive over examples, so the idea of interpolating target labels is not straightforward. To the best of our knowledge, we are the first to investigate mixing both examples and target labels for deep metric learning. We develop a generalized formulation that encompasses existing metric learning loss functions and modify it to accommodate for mixup, introducing Metric Mix, or Metrix. We also introduce a new metric---utilization---to demonstrate that by mixing examples during training, we are exploring areas of the embedding space beyond the training classes, thereby improving representations. To validate the effect of improved representations, we show that mixing inputs, intermediate representations or embeddings along with target labels significantly outperforms state-of-the-art metric learning methods on four benchmark deep metric learning datasets.

@conference{C123,

title = {It Takes Two to Tango: Mixup for Deep Metric Learning},

author = {Venkataramanan, Shashanka and Psomas, Bill and Kijak, Ewa and Amsaleg, Laurent and Karantzalos, Konstantinos and Avrithis, Yannis},